United States Department of Labor

Benchmarking the Current Employment Statistics survey: perspectives on current research, Monthly Labor Review, 2017.

We propose an improved procedure for benchmarking employment estimates from the Current Employment Statistics (CES) survey. The procedure involves more frequent updating of data, whereby seasonally adjusted CES estimates are benchmarked to seasonally adjusted Quarterly Census of Employment and Wages estimates on a quarterly, rather than annual, basis. We provide simulation results illustrating the advantages of the method, which can be used for benchmarking both national estimates and state and area estimates.

The U.S. Bureau of Labor Statistics (BLS) Current Employment Statistics (CES) survey is a quick-response business survey that provides information on employment, hours, and earnings each month.1 The CES program benchmarks its employee series in order to re-anchor sample-based employment estimates to full population counts. This process is designed to improve the accuracy of the CES all-employee series by replacing estimates with full population counts that are not subject to the sampling or modeling errors inherent in the CES monthly estimates. These population counts, derived from administrative records, are much less timely than the sample-based estimates, but provide a near census of establishment employment. The CES program is examining possible improvements in its benchmarking procedures.

The major source of benchmark data for the CES survey is the Quarterly Census of Employment and Wages (QCEW) program, which collects employment and wage data from states’ unemployment insurance (UI) tax records. All businesses subject to UI laws are required to report, on a quarterly basis, employment and wage information to the appropriate state workforce agency. UI records cover about 97 percent of nonfarm wage and salary jobs on civilian payrolls. Benchmark data for the remaining 3 percent of CES employment are constructed from alternative sources, primarily the County Business Patterns series and the Annual Survey of Public Employment and Payroll publications of the U.S. Census Bureau. Benchmark employment, also called population employment, is the sum of the QCEW employment count and the noncovered employment estimate from these other sources. In the rest of this article, the term QCEW denotes QCEW plus noncovered employment.

The size of the benchmark, or March, revision is equal to the difference between the QCEW and CES estimates of March employment. It is widely regarded as a measure of the accuracy of CES estimates. For the national total nonfarm series, absolute annual benchmark revisions averaged about 0.3 percent over the past decade. For national series, only March sample-based estimates are replaced with population data. In contrast, for state and metropolitan area series, all available months of population data are used to replace sample-based estimates.

BLS is exploring ways to improve the benchmarking procedures. The discussion in this article is an outgrowth of the work of the CES benchmarking team.2 Two improvements to the benchmarking procedures are being considered. First, the CES program is looking into the possibility of benchmarking the CES quarterly instead of annually. More frequent benchmarking will provide more timely revisions and generally reduce the size of the March revisions. Second, the program is exploring the possibility of adopting the same benchmarking procedure for state and metropolitan area estimates as that used for national estimates. This will achieve greater consistency between national estimates and state and metropolitan area estimates. Although the empirical work and simulation exercise presented in this article are confined to the national all-employee series, the analysis has broader applicability. The theoretical results and the results of the simulation exercise apply equally to any series, be it total or industry, or national or local.

Benchmarking the nonseasonally adjusted CES series to the nonseasonally adjusted QCEW series throughout the year is problematic because of the substantial difference in the seasonal patterns of those series.3 The QCEW has always shown larger seasonal movements than the CES, both before and after the CES conversion to a probability sample design in 2003. Consequently, the benchmarking team is proposing to benchmark seasonally adjusted CES estimates to seasonally adjusted QCEW estimates.

This article is organized as follows. In the next section, we describe the benchmarking procedure currently used for the national series. We then discuss how more frequent benchmarking can affect the March revision. With this information as background, we present the proposed quarterly benchmarking procedure and examine results from its implementation for the total private CES employment estimates.4 We conclude with a simulation exercise to evaluate our proposed procedure when there are potential errors in both the CES and the QCEW and when seasonal factors are measured with error.

Newly benchmarked CES national estimates are released with the January Employment Situation in early February of each year. Nonseasonally adjusted data are revised for 21 months, and seasonally adjusted data are revised for 5 years. For example, with the March 2012 benchmark release, nonseasonally adjusted data were revised from April 2011 through December 2012, and seasonally adjusted data were revised from January 2008 through December 2012.

Like all sample surveys, the CES survey is susceptible to two sources of error: sampling error and nonsampling error. Sampling error is present any time a sample is used to make inferences about a population. The magnitude of the sampling error, or the variance, relates directly to sample size and the percentage of the universe covered by the sample. The CES survey captures slightly under one-third of the universe employment each month, which is exceptionally high by usual sampling standards. This coverage ensures a relatively small sampling error at the total nonfarm employment level for the statewide and national series. Both the universe counts and the CES estimates are subject to nonsampling errors common to all censuses and surveys: coverage, response, nonresponse, and processing errors. The error structures for both the CES monthly survey and the UI universe are complex. Still, the two programs generally produce consistent total employment figures. Over the past decade, annual benchmark revisions at the national total nonfarm level have averaged 0.3 percent in absolute terms, ranging from 0.1 percent to 0.7 percent.

While the benchmark revision is often regarded as a proxy for total survey error, this interpretation does not consider error in the benchmark source data. The employment counts obtained from quarterly UI tax forms are administrative data that reflect employer recordkeeping practices and differing state laws and procedures. The benchmark revision can be more precisely interpreted as the difference between two independently derived employment counts, each subject to its own error sources. Overall, however, the universe employment counts are subject to less error than the CES sample-based estimates and therefore serve as valuable input data for improving the accuracy of the CES through benchmarking.

At the time of annual benchmarking, the monthly sample-based estimates for the 11 months preceding and the 9 months following the March benchmark are also subject to revision. Each annual benchmark revision affects 21 months of data for nonseasonally adjusted series.5

Monthly estimates for the 11 months preceding the March benchmark are recalculated with the use of a “wedge-back” procedure. In this procedure, the difference between the final benchmark level and the previously published March sample estimate is calculated and distributed back across the previous 11 months. The wedge is linear: eleven-twelfths of the March difference is added to the February estimate, ten-twelfths to the January estimate, and so on, back to the previous April estimate, which receives one-twelfth of the March difference. This method assumes that the total estimation error (in levels) since the last benchmark accumulated at a steady rate throughout the benchmark reference year.
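The linear wedge described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `wedge_back` and the employment levels are hypothetical, and the sketch treats March itself as receiving the full difference (twelve-twelfths), which is what replacing the March estimate with the benchmark level amounts to.

```python
def wedge_back(published, benchmark_march):
    """Apply a linear wedge to the 12 months ending in the benchmark March.

    published: 12 previously published levels, April (index 0) to March (index 11).
    benchmark_march: the final benchmark level for March.
    Returns the revised levels for the same 12 months.
    """
    if len(published) != 12:
        raise ValueError("expected 12 monthly levels, April through March")
    diff = benchmark_march - published[-1]
    # April receives 1/12 of the March difference, May 2/12, ..., February 11/12,
    # and March the full difference.
    return [level + diff * (i + 1) / 12 for i, level in enumerate(published)]


# Hypothetical levels (in thousands), flat at 1,000 from April through March.
published = [1000.0] * 12
revised = wedge_back(published, benchmark_march=1012.0)
print([round(x, 1) for x in revised])
```

With a March difference of 12, each successive month from April onward is raised by one more unit, which is the steady accumulation of error that the procedure assumes.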

Estimates for the 9 months following the March benchmark also are recalculated each year.6 These postbenchmark estimates reflect the application of final sample-based monthly changes to new benchmark levels for March. The sample changes are the ones calculated and used for the previously published final sample-based estimates.

Recall that we have no knowledge about the individual monthly errors, but we do have information about the total error from the size of the March revision. The natural choice of an error-correction model would be to assume that the original rates are all mismeasured by different amounts, but that the implied errors in monthly employment sum to the March discrepancy. In a typical year, one would expect monthly errors to be roughly independent of each other and to have roughly constant variance. In years with a large March revision (typically at turning points in the cycle), the errors are likely to be correlated, possibly resulting from errors in the estimate of the birth–death factor.7

If one uses only the March QCEW, there is no alternative to wedging back for an entire year. However, if one benchmarks more frequently, using the QCEW at other times, then one will need to wedge back for a shorter period of time, mitigating the issue.

Although the March employment revision does not provide information about individual monthly errors, the March estimate does provide a natural diagnostic tool for evaluating the effect of more frequent updating. To facilitate the discussion, it is helpful to introduce some notation. Letting $Q_t$ denote the March employment count from the QCEW in year $t$ and letting $\hat{E}_t$ denote the initial CES estimate of March employment in year $t$, we can express the annual March revision as

(1) $R_t = Q_t - \hat{E}_t$.

Letting $\hat{r}_m$ denote the CES estimate of the employment growth rate in month $m$ and letting $r_m$ be the true employment growth rate in month $m$,8 we can rewrite equation (1) as

(2) $R_t = Q_{t-1}\prod_{m} r_m - Q_{t-1}\prod_{m} \hat{r}_m = Q_{t-1}\left(\prod_{m} r_m - \prod_{m} \hat{r}_m\right)$,

where the products run over the 12 months from April of year $t-1$ to March of year $t$.

In interpreting equation (2), note that if $\hat{r}_m = r_m$, the estimated CES growth rate in month $m$ contains no error. If $\hat{r}_m > r_m$, the CES overstates employment growth in month $m$, and if $\hat{r}_m < r_m$, the CES underestimates that growth. Equation (2) makes explicit the relationship between the monthly errors and the March revision: the March revision depends on the cumulative (over-the-year) monthly errors in the CES rates, and the closer the cumulative error is to zero, the smaller the March revision. The March revision tells us very little about the individual monthly errors in the CES rates. Indeed, one would hope that monthly errors largely average out, leaving us with a relatively small revision. A large March revision likely means that monthly errors in a given year are highly correlated. An example of this was the start of the Great Recession (2007–09), when CES underestimated the sizes of the early employment losses. Throughout this article, we focus on quarterly updating.9
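The dependence of the March revision on cumulative, rather than individual, monthly errors can be checked numerically. The sketch below is illustrative only. It assumes that growth rates are gross month-to-month ratios chained from a common prior-March base, and every number in it is hypothetical.

```python
import math


def march_revision(base_level, true_rates, ces_rates):
    """Population March level minus CES March level.

    Both levels are built by chaining 12 monthly gross growth rates
    from the same prior-March base level.
    """
    population = base_level * math.prod(true_rates)
    estimate = base_level * math.prod(ces_rates)
    return population - estimate


base = 100_000.0            # hypothetical prior-March benchmark level
true_rates = [1.001] * 12   # hypothetical true monthly growth rates

# Offsetting errors: months alternately overstated and understated by the same factor.
offsetting = [r * f for r, f in zip(true_rates, [1.002, 1 / 1.002] * 6)]
# Same-signed errors: growth overstated in every month.
correlated = [r * 1.002 for r in true_rates]

rev_offsetting = march_revision(base, true_rates, offsetting)
rev_correlated = march_revision(base, true_rates, correlated)
print(round(rev_offsetting, 6), round(rev_correlated, 1))
```

In the first case every monthly rate is wrong, yet the revision is essentially zero. In the second case the same-sized errors compound into a large negative revision.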

At present, there is a 10-month lag between the QCEW reference period and the time that the QCEW employment figures are available for benchmarking. Consequently, CES estimates are currently revised every January. With quarterly benchmarking, CES estimates would also be revised about 10 months after each quarter, using the June, September, and December QCEW numbers.10

For convenience, in the analysis that follows, we work with the proportional error $e_m$ in the CES estimated rate of change in month $m$ employment. This error may be expressed as $e_m = \hat{r}_m / r_m$ and substituted in equation (2):11

(2’) $R_t = Q_{t-1}\prod_{m} r_m \left(1 - \prod_{m} e_m\right) = Q_t\left(1 - \prod_{m} e_m\right)$

The initial CES estimate of March employment in year $t$ consists of two parts: the March QCEW employment level in year $t-1$ and the product of CES monthly growth-rate estimates from April of year $t-1$ to March of year $t$. Using a similar derivation as that used earlier to obtain equation (2), one can show that this estimate is equal to

(3) $\hat{E}_t = Q_t \, e_{2,t-1} \, e_{3,t-1} \, e_{4,t-1} \, e_{1,t}$,

where $e_{q,t}$ is the proportional error in the CES estimate in quarter $q$ of year $t$. The estimate is again composed of two parts: the March QCEW employment level in year $t$ and the product of the quarterly errors in the CES rates from the second quarter of year $t-1$ to the first quarter of year $t$.

Let us assume for the moment that the June QCEW employment level in year $t-1$ is the true population value.12 Incorporating QCEW information for the second quarter (June) of year $t-1$ into the CES estimate when it first becomes available (in April of year $t$) results in a revised March estimate that can be written as

(4) $\hat{E}_t^{(1)} = Q_t \, e_{3,t-1} \, e_{4,t-1} \, e_{1,t}$.

Essentially, including the June QCEW information in year $t-1$ into the March estimate in year $t$ eliminates the errors in the CES monthly rates from April to June of year $t-1$. The variance of the revised estimate, $\hat{E}_t^{(1)}$, around the true population value is therefore lower than the variance of the initial estimate, $\hat{E}_t$, around the true population value.13 The next revision in July will yield a still better estimate and, of course, the same will be true of the October revision.
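A small Monte Carlo experiment illustrates why removing one quarter of error lowers the variance of the March estimate. The sketch rests on assumptions that are not taken from the article: quarterly proportional errors are independent draws of the form 1 plus normal noise, the QCEW is treated as the true value, and the error size, March level, and function name are all hypothetical.

```python
import random


def simulate_march_errors(n_draws=20_000, sd=0.002, q_march=100_000.0, seed=0):
    """Mean squared error of the initial and once-revised March estimates."""
    rng = random.Random(seed)
    sq_initial = sq_revised = 0.0
    for _ in range(n_draws):
        # Proportional errors for Q2, Q3, Q4 of year t-1 and Q1 of year t.
        e = [1 + rng.gauss(0, sd) for _ in range(4)]
        initial = q_march * e[0] * e[1] * e[2] * e[3]
        revised = q_march * e[1] * e[2] * e[3]  # Q2 error removed by the June QCEW
        sq_initial += (initial - q_march) ** 2
        sq_revised += (revised - q_march) ** 2
    return sq_initial / n_draws, sq_revised / n_draws


mse_initial, mse_revised = simulate_march_errors()
print(mse_revised < mse_initial)
```

Under these assumptions, the revised estimate carries three quarters of error instead of four, so its mean squared error should be roughly three-fourths that of the initial estimate.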

Using the March estimate as a convenient benchmark, we have shown that more frequent updating yields improved employment estimates. But what can we say about the revisions themselves? Let $R_t^{(1)}$ denote the first March revision in April, and let $R_t^{(2)}$, $R_t^{(3)}$, and $R_t^{(4)}$ denote, respectively, the second, third, and fourth March revisions. It is straightforward to show that

(5) $R_t^{(1)} + R_t^{(2)} + R_t^{(3)} + R_t^{(4)} = R_t$.

Equation (5) reflects that the four quarterly benchmark revisions sum to the benchmark revision when we benchmark only annually. One can also show that

(6) $|R_t| \le |R_t^{(1)}| + |R_t^{(2)}| + |R_t^{(3)}| + |R_t^{(4)}|$,

with equality holding if and only if the quarterly benchmark revisions are all positive or all negative. When CES errors are opposite signed in different quarters, the revision in one quarter will at least partially offset that in another quarter. This leads to a seeming puzzle. Earlier, we saw that each revision yielded a better estimate of March employment than the previous one. If this is so, why should the revisions be offsetting, with a positive revision in one quarter being followed by a negative revision in a subsequent quarter or vice versa?

A positive revision in, say, April of year $t$ means that the CES initially underestimated employment growth from April to June in year $t-1$. Conversely, a negative revision in, say, July of year $t$ means that the CES initially overestimated employment growth from July to September in year $t-1$. Occurrences like these should be common if errors in the CES are uncorrelated over time. When the initial errors in different quarters are in different directions, the revisions (which will be opposite signed from the errors) will be as well. Indeed, having quarterly revisions that are not all of the same sign should be reassuring, because this means that the CES program is not making systematic errors. When the CES errors are all in the same direction, so will be the revisions. Quarterly benchmarking is especially valuable in such cases, because it allows us to begin correcting the systematic errors more quickly than annual benchmarking would.14

In sum, offsetting quarterly revisions should not be a reason for concern. They suggest that the CES program is not making systematic errors. On the hopefully rare occasions when errors are correlated (which tends to happen at turning points in the business cycle), quarterly benchmarking provides an important safeguard against systematic errors being baked into the estimates for an unnecessarily long period.
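The two properties of quarterly revisions discussed above, that they sum to the annual revision and that offsetting revisions make the annual revision smaller than the sum of their absolute values, follow from simple arithmetic on a sequence of March estimates. The sketch below uses hypothetical levels, and the function name is ours.

```python
def quarterly_revisions(estimates):
    """Successive differences of a sequence of March estimates.

    estimates: [initial CES estimate, after 1st revision, after 2nd revision,
                after 3rd revision, final benchmark level].
    """
    return [later - earlier for earlier, later in zip(estimates, estimates[1:])]


# Hypothetical March levels (in thousands).
path = [110_000, 110_300, 110_100, 110_250, 110_200]
revs = quarterly_revisions(path)   # [300, -200, 150, -50]
annual = path[-1] - path[0]        # 200

print(revs, annual)
print(sum(revs) == annual)                       # the quarterly revisions sum to the annual one
print(abs(annual) <= sum(abs(r) for r in revs))  # offsetting revisions shrink the annual total
```

Here the four revisions have mixed signs, so the annual revision of 200 is well below the 700 obtained by adding their absolute values.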

It is well documented that the QCEW and CES series have different seasonal patterns.15 These seasonal patterns are, of course, not an issue if one benchmarks annually. However, they must be accounted for if one benchmarks more frequently. BLS has examined several methods for dealing with the different seasonal patterns in the QCEW. It has determined that it is best to explicitly model and estimate the seasonal patterns in CES and QCEW data, because methods that implicitly adjust for seasonality have been found lacking.

The proposed procedure is simple. We assume that the ratio of the seasonally adjusted quarterly growth rate from the QCEW to the seasonally adjusted quarterly growth rate from the CES is an unbiased, albeit noisy, signal of the over-the-quarter error term. When a new quarter of QCEW data becomes available, the proposed method adjusts monthly rates in that quarter by a constant determined by the ratio of seasonally adjusted quarterly growth rates. Mathematically, the adjusted monthly growth rate can be expressed as

(7) $r^{B}_{m,q} = \hat{r}_{m,q}\left(\dfrac{g^{Q}_{q}}{g^{C}_{q}}\right)^{1/3}$,

where $\hat{r}_{m,q}$ denotes the original CES estimate of the rate of change in employment in month $m$ of quarter $q$, $r^{B}_{m,q}$ denotes the benchmarked CES estimate of the rate of change in employment in month $m$ of quarter $q$, $g^{Q}_{q}$ denotes the seasonally adjusted rate of change in QCEW employment in quarter $q$, and $g^{C}_{q}$ denotes the seasonally adjusted CES estimate of the rate of change in employment in quarter $q$.16

As an example, suppose that the estimated CES employment growth rate is 0.997, 1.0025, and 1.01 in April 2014, May 2014, and June 2014, respectively. In addition, suppose that the seasonally adjusted CES growth rate from April to June 2014 is 1.01 and that the seasonally adjusted QCEW growth rate over the same period is 1.007. Because the seasonally adjusted CES growth rate from April to June 2014 exceeds the seasonally adjusted QCEW growth rate, our benchmark procedure will lower the estimated employment growth throughout the quarter. Specifically, since $(1.007/1.01)^{1/3} \approx .999$, the benchmarked growth rate for April 2014 is given by .997 × .999 = .996. Similarly, the benchmarked growth rates for May and June 2014 are, respectively, 1.0025 × .999 = 1.0015 and 1.01 × .999 = 1.009. Therefore, the benchmarked growth rate over the quarter is given by .996 × 1.0015 × 1.009 = 1.0065.
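The worked example can be reproduced with a short function. This is a sketch, not the production procedure. It assumes that the constant applied to each month is the cube root of the ratio of seasonally adjusted quarterly growth rates, which is consistent with the factor of about .999 used in the example, and the function name is hypothetical.

```python
def benchmark_monthly_rates(ces_monthly, ces_sa_quarterly, qcew_sa_quarterly):
    """Scale the three monthly CES growth rates in a quarter by a common factor.

    The factor is the cube root of the ratio of seasonally adjusted quarterly
    growth rates (QCEW over CES), so that the three monthly adjustments
    compound to the full quarterly ratio.
    """
    factor = (qcew_sa_quarterly / ces_sa_quarterly) ** (1 / 3)
    return factor, [rate * factor for rate in ces_monthly]


# Inputs from the April-June 2014 example in the text.
factor, adjusted = benchmark_monthly_rates([0.997, 1.0025, 1.01], 1.01, 1.007)
quarter = adjusted[0] * adjusted[1] * adjusted[2]
print(round(factor, 3), [round(r, 4) for r in adjusted], round(quarter, 4))
```

Carrying full precision rather than the rounded factor gives the same figures as the text to the digits shown there.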

Let $r_q$ denote the true quarterly growth rate, and let $u_q$ denote the proportional error in the adjustment. This error has two possible sources, namely, error in the estimation of the seasonal factors or error in the QCEW itself. Multiplying the monthly rates in equation (7) over the quarter, one can show that the benchmarked quarterly growth rate, $r^{B}_{q}$, is given by

(8) $r^{B}_{q} = r_q \, u_q$.

Having calculated the adjusted rates for the months in quarter $q$, one can readily obtain revised employment growth estimates for quarter $q$. These estimates yield a revised estimate for end-of-quarter employment, which, in turn, leads to a revised employment level in subsequent months. For example, in May 2015, we would obtain corrected values for April 2014–March 2015 employment growth. The corrected values from April 2014 to June 2014 are obtained by applying the adjusted rates for the period April 2014–June 2014. The corrected values from July 2014 to March 2015 are obtained by applying the new June 2014 employment base, but using the initial CES growth rates. For example, the revised estimate for March 2015 is given by

(9) $\hat{E}^{(1)}_{\text{Mar 2015}} = Q_{\text{Mar 2014}} \times r^{B}_{\text{Q2 2014}} \times \prod_{m=\text{Jul 2014}}^{\text{Mar 2015}} \hat{r}_m$.

Similarly, revised estimates could be published when QCEW estimates in September and December become available.

Continuing with our example, suppose that the QCEW employment estimate in March 2014 is 120 million. Also, suppose that the accumulated growth rate from July 2014 through March 2015 is 1.02 (that is, suppose that $\prod_{m=\text{Jul 2014}}^{\text{Mar 2015}} \hat{r}_m = 1.02$). Then the revised estimate in May 2015 for March 2015 employment is given by 120,000,000 × 1.0065 × 1.02 = 123,200,000.
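The revised March level in this example is a simple chain of a base level and growth rates, which the following sketch makes explicit. The function name is hypothetical, and the rates are passed as lists so that monthly rates could be supplied in place of the accumulated figures used here.

```python
import math


def revised_march_level(base_march, adjusted_rates, later_initial_rates):
    """Chain a benchmark March level forward to the following March.

    adjusted_rates: benchmarked growth rates for the newly benchmarked quarter.
    later_initial_rates: initial CES growth rates for the remaining months.
    """
    return base_march * math.prod(adjusted_rates) * math.prod(later_initial_rates)


# March 2014 QCEW level, benchmarked April-June 2014 growth, and accumulated
# initial CES growth from July 2014 through March 2015, all from the example.
level = revised_march_level(120_000_000, [1.0065], [1.02])
print(round(level))
```

The unrounded product is about 123.196 million, which the text reports as 123,200,000.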

In the February revision, as in the three preceding revisions, adjusted growth rates for the months of January, February, and March of the previous year are calculated according to equation (7). These adjusted growth rates in turn yield revised estimates for employment growth for the January–March period. However, the published revision in January also includes a second component arising from the fact that the adjusted quarterly growth rates will have some error. This component, given by the difference between actual March QCEW employment and the employment level predicted from the adjusted quarterly growth rates, reflects errors in the estimation of the seasonal factors.

Table 1 shows the revisions that result from applying the previously outlined method to revising estimates of total private employment for the period 2007–13.17 Although the March revision does not tell us much about the accuracy of the individual monthly errors, it is nevertheless an informative statistic. Recall from our earlier discussion that a large revision based on annual benchmarking suggests that the monthly estimates are correlated over the past year and therefore do not average out to zero.

| Date | QCEW value | Original CES estimate | Revised estimate as of April | Revised estimate as of July | Revised estimate as of October | Revised estimate as of January (never published) |
|---|---|---|---|---|---|---|
| Employment estimates | | | | | | |
| March 2007 | 108,250,800 | 109,124,676 | 109,179,508 | 108,977,936 | 108,893,811 | 108,365,917 |
| March 2008 | 105,535,500 | 106,097,960 | 105,951,628 | 105,626,170 | 105,679,140 | 105,570,462 |
| March 2009 | 107,565,200 | 107,194,505 | 107,355,770 | 107,415,776 | 107,415,776 | 107,467,022 |
| March 2010 | 110,009,400 | 109,777,090 | 109,604,541 | 110,096,767 | 109,795,585 | 110,155,060 |
| March 2011 | 112,699,400 | 112,152,430 | 112,265,409 | 112,076,221 | 111,923,700 | 112,427,355 |
| March 2012 | 114,907,500 | 114,940,754 | 115,001,394 | 114,969,354 | 114,991,067 | 114,855,962 |
| March 2013 | 117,717,000 | 117,925,267 | 118,033,062 | 117,982,639 | 118,048,168 | 117,688,749 |
| Signed difference between estimate and QCEW | | | | | | |
| March 2007 | — | 873,876 | 928,708 | 727,136 | 643,011 | 115,117 |
| March 2008 | — | 562,460 | 416,128 | 90,670 | 143,640 | 34,962 |
| March 2009 | — | -370,695 | -209,430 | -149,424 | -149,424 | -98,178 |
| March 2010 | — | -232,310 | -404,859 | 87,367 | -213,815 | 145,660 |
| March 2011 | — | -546,970 | -433,991 | -623,179 | -775,700 | -272,045 |
| March 2012 | — | 33,254 | 93,894 | 61,854 | 83,567 | -51,538 |
| March 2013 | — | 208,267 | 316,062 | 265,639 | 331,168 | -28,251 |

Note: QCEW = Quarterly Census of Employment and Wages; CES = Current Employment Statistics. Source: U.S. Bureau of Labor Statistics.

Looking at the estimates in the bottom panel of table 1, we see that the estimate for March employment generally improves as it is revised throughout the year. The improvement is quite noticeable for some years, especially those in which the initial CES estimate differs substantially from the QCEW value. The estimates in the last column simply reflect the errors in the estimation of the seasonal factors. These estimates indicate how well the adjustment model is estimating March employment. In every year but one, the absolute error stemming from the estimation of the seasonal factors is smaller than the error in the initial CES estimate (compare the last and second columns of the table). At the very least, this smaller error suggests that the ratio of the seasonally adjusted QCEW quarterly growth rate to the seasonally adjusted CES quarterly growth rate contains some useful information regarding the nature of monthly errors in CES growth rates. This information is particularly useful when the monthly errors are correlated, as they seem to be from April 2008 to March 2009 and from April 2009 to March 2010. The large negative revision indicates that the CES may be overestimating the growth rate in those years, and it is noteworthy that the quarterly rate adjustments are always negative. This suggests that capitalizing on the information contained in non-March QCEW data may give an earlier (earlier than the next March) indication that the CES is systematically overestimating or underestimating the growth rates.
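The comparison between the original CES error and the error remaining after the January revision can be verified directly from the levels in table 1. The sketch below copies the QCEW value, the original CES estimate, and the estimate as of January for each year. The variable and function names are ours.

```python
# (QCEW value, original CES estimate, revised estimate as of January), from table 1.
TABLE_1 = {
    2007: (108_250_800, 109_124_676, 108_365_917),
    2008: (105_535_500, 106_097_960, 105_570_462),
    2009: (107_565_200, 107_194_505, 107_467_022),
    2010: (110_009_400, 109_777_090, 110_155_060),
    2011: (112_699_400, 112_152_430, 112_427_355),
    2012: (114_907_500, 114_940_754, 114_855_962),
    2013: (117_717_000, 117_925_267, 117_688_749),
}


def abs_errors(year):
    """Absolute gaps from the QCEW: (original CES estimate, January estimate)."""
    qcew, original, january = TABLE_1[year]
    return abs(original - qcew), abs(january - qcew)


# Years in which the January estimate is NOT closer to the QCEW than the original.
exceptions = [year for year in TABLE_1 if abs_errors(year)[1] >= abs_errors(year)[0]]
print(exceptions)  # [2012]
```

The single exception is March 2012, when the original CES estimate was already within about 33,000 of the QCEW value.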

The results presented so far strongly indicate that the proposed benchmark procedure results in an improved March estimate. Although the revised quarterly estimates likely improve on the initial CES estimates, there is insufficient information to show this definitively (after all, if we knew the true quarterly estimates, we would not need the benchmarking procedure). To handle this issue, we now perform a simulation exercise.

The simulation exercise also addresses another important question. The success of the proposed benchmarking procedure depends crucially on how accurately we estimate the seasonal factors. As one obtains finer CES estimates by industry or area, the estimates will have a larger error component. This, in and of itself, makes the proposed benchmarking procedure more advantageous. However, there is an offsetting effect: the greater the error in the CES estimate, the greater the errors in the resulting estimates of the seasonal factors. At some point, will the errors in the estimates of the seasonal factors be sufficiently great that the quarterly benchmarking procedure actually results in errors that exceed those in the unadjusted CES estimates?

In laying out our simulation model, we let the “true” employment growth that we would like to measure with the CES be given by ![]() , where ![]() represents a seasonal factor. The CES estimate of employment growth in quarter $q$ of year $t$ is then given by

(10) ![]() ,

where ![]() is the error in the CES estimate of the growth rate.

In doing so, we implicitly assume that the seasonal variation in the CES series represents true seasonal variation in the underlying employment. In reality, the seasonal factors could also reflect systematic seasonal errors in the CES. However, without additional information, one cannot distinguish empirically between true seasonal variation in the underlying employment series and seasonal variation that reflects systematically seasonal measurement error.

Similarly to the way we treat CES employment, we let the QCEW estimate of employment growth be given by

(11) $\hat{g}^{Q}_{qy} = s^{Q}_{q}\, g_{qy} + \varepsilon^{Q}_{qy}$.

Note that we are no longer assuming that the QCEW growth rate is measured without error. Rather, like the CES, the QCEW has an error with a random component $\varepsilon^{Q}_{qy}$. In addition, there is a QCEW seasonal factor that may partly reflect QCEW measurement error that has a systematic seasonal component.

In our simulations, we normalize the underlying growth rate $g_{qy}$ to 1 (i.e., no employment change) for all four quarters $q$ and all years $y$ ($y$ runs from 1 to 5). We set the seasonal factors to be the following:

(12) ![]() , ![]() , ![]() , ![]()

(13) ![]() , ![]() , ![]() , ![]()

Note from equations (12) and (13) that we have set the QCEW to be more seasonal than the CES.18

Finally, we assume that the random errors in the CES and the QCEW are normally distributed, with respective variances $\sigma^{2}_{C}$ and $\sigma^{2}_{Q}$. Let $p$ denote the ratio of these variances:

(14) $p = \sigma^{2}_{Q} / \sigma^{2}_{C}$.

In the simulations to follow, we allow both the CES error variance $\sigma^{2}_{C}$ and the variance ratio $p$ to vary. For a given value of $\sigma^{2}_{C}$, a lower $p$ is equivalent to a decrease in the QCEW error variance $\sigma^{2}_{Q}$. Thus, the lower is $p$, the more precise is the QCEW relative to the CES. We should expect the increased precision of the QCEW to lead to improved performance of the benchmark estimator relative to the initial CES estimator. For a given $p$, a higher value of $\sigma^{2}_{C}$ also means an increase in $\sigma^{2}_{Q}$. As a consequence, both the initial CES estimate and the benchmarked estimate will be less precise. There is another likely effect of an increase in $\sigma^{2}_{C}$ and $\sigma^{2}_{Q}$. The greater these variances are, the less precise the estimates of the seasonal factors are likely to be. The result is a less precise benchmarked estimate. The net effect on the performance of the benchmark estimator relative to the initial CES estimator is an open question. It is not obvious a priori whether, for a given value of $p$, a greater $\sigma^{2}_{C}$ is associated with improved or worsened performance of the benchmark estimator relative to the initial CES estimator.
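To make the parameterization concrete, the following minimal sketch tabulates the QCEW error variance implied by a few settings. It assumes the ratio is the QCEW error variance divided by the CES error variance, as the discussion above implies. The function name `qcew_variance` is illustrative, and the short grid is a subset of the settings that appear in the figure data later in this section.

```python
def qcew_variance(ces_variance: float, p: float) -> float:
    """QCEW error variance implied by a CES error variance and the ratio p."""
    return p * ces_variance


ces_variances = [0.00001, 0.0001, 0.001]
ratios = [0.0, 0.01, 0.1, 0.5, 1.0]

# Every (CES variance, p) pair maps to one QCEW variance.
grid = {(v, p): qcew_variance(v, p) for v in ces_variances for p in ratios}

for (v, p), q in grid.items():
    print(f"CES variance={v:.5f}  p={p:<4}  QCEW variance={q:.7f}")
```

Reading down a fixed CES variance shows the QCEW variance falling with the ratio; reading across a fixed ratio shows both variances rising together.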

For any given combination of the CES error variance $\sigma^{2}_{C}$ and the variance ratio $p$, we ran 1,000 simulations of both the CES and QCEW random errors, $\varepsilon^{C}_{qy}$ and $\varepsilon^{Q}_{qy}$. These simulations yielded 1,000 estimates of the original CES estimate and the proposed CES estimate for all quarters for 5 years. The X12 procedure in SAS was used to estimate seasonal factors.
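The simulation design can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure used for the reported results. Errors are taken to be additive and normal. The seasonal factors are hypothetical placeholders. Seasonal factors are estimated by simple per-quarter averages rather than by X12. The benchmarked estimate is assumed to be the QCEW growth rate re-expressed with CES seasonality. All names (`simulate`, `seasonal_means`, `S_CES`, `S_QCEW`) are illustrative.

```python
import math
import random

# Hypothetical seasonal factors: the values in equations (12) and (13) are not
# reproduced here. The QCEW is set to be more seasonal than the CES.
S_CES = [0.99, 1.01, 1.00, 1.00]
S_QCEW = [0.98, 1.02, 1.01, 0.99]
YEARS = 5


def seasonal_means(series):
    """Per-quarter means over years, a simple stand-in for X12 (assumption).

    With true growth fixed at 1, the quarter mean estimates the seasonal factor.
    """
    return [sum(year[q] for year in series) / len(series) for q in range(4)]


def simulate(ces_var, p, n_sims=1000, seed=0):
    """Return (RMSE of original CES estimate, RMSE of benchmarked estimate)."""
    rng = random.Random(seed)
    sd_c = math.sqrt(ces_var)
    sd_q = math.sqrt(p * ces_var)  # QCEW variance = p * CES variance
    se_orig = se_bench = 0.0
    for _ in range(n_sims):
        ces = [[s + rng.gauss(0.0, sd_c) for s in S_CES] for _ in range(YEARS)]
        qcew = [[s + rng.gauss(0.0, sd_q) for s in S_QCEW] for _ in range(YEARS)]
        s_c, s_q = seasonal_means(ces), seasonal_means(qcew)
        for y in range(YEARS):
            for q in range(4):
                # Assumed estimator: QCEW growth re-expressed with CES seasonality.
                bench = qcew[y][q] * s_c[q] / s_q[q]
                se_orig += (ces[y][q] - S_CES[q]) ** 2
                se_bench += (bench - S_CES[q]) ** 2
    n = n_sims * YEARS * 4
    return math.sqrt(se_orig / n), math.sqrt(se_bench / n)


if __name__ == "__main__":
    for p in (0.0, 0.1, 1.0):
        orig, bench = simulate(ces_var=0.0001, p=p)
        print(f"p={p}: original RMSE={orig:.5f}, benchmarked RMSE={bench:.5f}")
```

In this simplified setup, the case of a zero ratio reduces the benchmarked error to the error in the estimated CES seasonal factor. The numbers it produces should not be expected to match the figures, because X12 is not a simple average and the seasonal factors here are placeholders.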

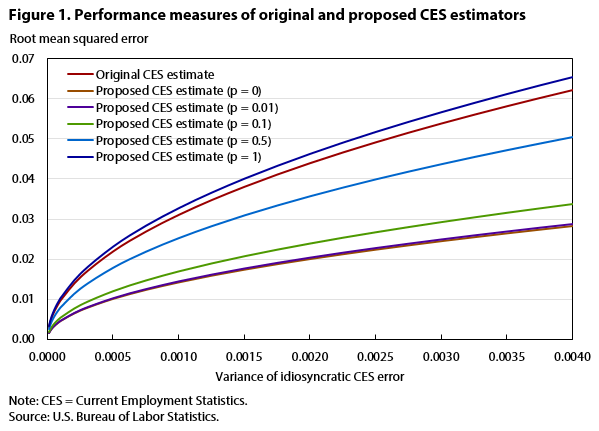

Figures 1, 2, and 3 summarize our simulation results. First consider the curve labeled “Original CES estimate” in figure 1. Noting that the root mean squared error (RMSE) is graphed on the vertical axis and the CES error variance $\sigma^{2}_{C}$ on the horizontal, we see that, as expected, the RMSE of the CES estimate increases with $\sigma^{2}_{C}$.

| Variance of idiosyncratic CES error | Original CES estimate | Proposed CES estimate (p = 0) | Proposed CES estimate (p = 0.01) | Proposed CES estimate (p = 0.1) | Proposed CES estimate (p = 0.5) | Proposed CES estimate (p = 1) |
|---|---|---|---|---|---|---|
| 0.000010 | 0.003117 | 0.001409 | 0.001440 | 0.001692 | 0.002520 | 0.003272 |
| 0.000020 | 0.004408 | 0.001992 | 0.002037 | 0.002393 | 0.003564 | 0.004627 |
| 0.000030 | 0.005398 | 0.002440 | 0.002495 | 0.002931 | 0.004365 | 0.005666 |
| 0.000040 | 0.006233 | 0.002817 | 0.002881 | 0.003385 | 0.005040 | 0.006543 |
| 0.000050 | 0.006969 | 0.003150 | 0.003221 | 0.003784 | 0.005635 | 0.007315 |
| 0.000060 | 0.007634 | 0.003451 | 0.003528 | 0.004145 | 0.006173 | 0.008013 |
| 0.000070 | 0.008246 | 0.003726 | 0.003810 | 0.004477 | 0.006667 | 0.008654 |
| 0.000080 | 0.008815 | 0.003984 | 0.004074 | 0.004786 | 0.007127 | 0.009252 |
| 0.000090 | 0.009350 | 0.004225 | 0.004320 | 0.005076 | 0.007559 | 0.009812 |
| 0.000100 | 0.009856 | 0.004454 | 0.004554 | 0.005351 | 0.007968 | 0.010343 |
| 0.000250 | 0.015583 | 0.007042 | 0.007200 | 0.008459 | 0.012597 | 0.016355 |
| 0.000500 | 0.022038 | 0.009958 | 0.010182 | 0.011963 | 0.017816 | 0.023128 |
| 0.000750 | 0.026991 | 0.012195 | 0.012469 | 0.014650 | 0.021818 | 0.028328 |
| 0.001000 | 0.031167 | 0.014081 | 0.014398 | 0.016916 | 0.025193 | 0.032710 |
| 0.001250 | 0.034845 | 0.015745 | 0.016099 | 0.018913 | 0.028166 | 0.036572 |
| 0.001500 | 0.038171 | 0.017251 | 0.017639 | 0.020720 | 0.030858 | 0.040058 |
| 0.001750 | 0.041229 | 0.018622 | 0.019041 | 0.022371 | 0.033323 | 0.043265 |
| 0.002000 | 0.044076 | 0.019905 | 0.020352 | 0.023913 | 0.035623 | 0.046256 |
| 0.002250 | 0.046750 | 0.021114 | 0.021589 | 0.025366 | 0.037787 | 0.049064 |
| 0.002500 | 0.049279 | 0.022254 | 0.022754 | 0.026736 | 0.039830 | 0.051718 |
| 0.002750 | 0.051684 | 0.023336 | 0.023861 | 0.028039 | 0.041767 | 0.054240 |
| 0.003000 | 0.053982 | 0.024369 | 0.024918 | 0.029282 | 0.043623 | 0.056652 |
| 0.003250 | 0.056186 | 0.025365 | 0.025936 | 0.030478 | 0.045406 | 0.058965 |
| 0.003500 | 0.058307 | 0.026323 | 0.026915 | 0.031628 | 0.047122 | 0.061190 |
| 0.003750 | 0.060354 | 0.027246 | 0.027859 | 0.032736 | 0.048774 | 0.063337 |
| 0.004000 | 0.062333 | 0.028150 | 0.028782 | 0.033817 | 0.050384 | 0.065417 |

Note: CES = Current Employment Statistics. Source: U.S. Bureau of Labor Statistics.

The remaining curves in figure 1 illustrate the performance of the proposed benchmark estimator. Each of these curves is associated with a different value of the variance ratio $p$. Rightward movement along any of the curves is associated with a higher RMSE. This is, of course, expected, because, for a given $p$, a higher CES error variance $\sigma^{2}_{C}$ also means a higher QCEW error variance $\sigma^{2}_{Q}$. Now consider the effect of variations in $p$, recalling that, for a given $\sigma^{2}_{C}$, a lower $p$ is associated with a lower value of $\sigma^{2}_{Q}$. The lowest curve in the figure is that corresponding to a value of $p$ equal to 0. This curve lies well below that corresponding to the original CES estimate: when $p$ (and therefore $\sigma^{2}_{Q}$) is 0, the RMSE of the proposed benchmark estimate is always smaller than that of the original CES estimate for any given value of $\sigma^{2}_{C}$.

As the variance ratio $p$ increases, the associated RMSE curve moves up, indicating a degradation in the performance of the proposed benchmark estimator. However, for plausible values of $p$, the proposed estimator still yields a substantial performance gain. This gain disappears only when $p$ is close to 1. As indicated by the uppermost curve in the figure, when $p = 1$ (so that $\sigma^{2}_{Q} = \sigma^{2}_{C}$), the RMSE of the benchmark estimate exceeds that of the original CES estimate. This reflects the fact that when $p = 1$, the error in the benchmark estimate stemming from the imperfect estimation of the seasonal factors is not offset by an informational advantage of the QCEW estimate over the CES.

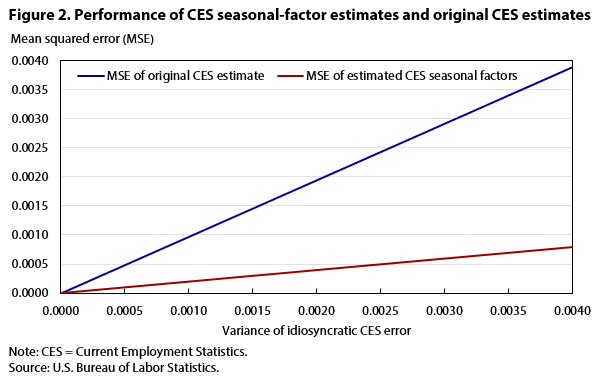

Figure 2 shows the effect of an increase in the CES error variance $\sigma^{2}_{C}$ on the mean squared error (MSE) of the CES estimate and the MSE of the estimated seasonal factors. As expected, the MSE of the CES estimate increases one for one with the increase in $\sigma^{2}_{C}$. The MSE of the seasonal factors also increases with the increase in $\sigma^{2}_{C}$, but at a much slower rate (note that the slope of the red curve is well below 1). The same is true for the relationship (not pictured) between the MSE of the QCEW seasonal factors and the QCEW error variance $\sigma^{2}_{Q}$. Recall from figure 1 that, for a plausible value of the variance ratio $p$, the RMSE of the proposed estimator is smaller than that of the simple CES estimator for any value of $\sigma^{2}_{C}$. This is indicated by the fact that the RMSE curve for the proposed estimator lies entirely below that for the CES estimator. This result is reasonable in light of our finding in figure 2 that increases in $\sigma^{2}_{C}$ and $\sigma^{2}_{Q}$ cause the MSEs in the estimated seasonal factors to increase, but at a slower rate.

| Variance of idiosyncratic CES error | MSE of original CES estimate | MSE of estimated CES seasonal factors |
|---|---|---|
| 0.00001 | 0.000010 | 0.000002 |
| 0.00002 | 0.000019 | 0.000004 |
| 0.00003 | 0.000029 | 0.000006 |
| 0.00004 | 0.000039 | 0.000008 |
| 0.00005 | 0.000049 | 0.000010 |
| 0.00006 | 0.000058 | 0.000012 |
| 0.00007 | 0.000068 | 0.000014 |
| 0.00008 | 0.000078 | 0.000016 |
| 0.00009 | 0.000087 | 0.000018 |
| 0.00010 | 0.000097 | 0.000020 |
| 0.00025 | 0.000243 | 0.000050 |
| 0.00050 | 0.000486 | 0.000099 |
| 0.00075 | 0.000729 | 0.000149 |
| 0.00100 | 0.000971 | 0.000198 |
| 0.00125 | 0.001214 | 0.000248 |
| 0.00150 | 0.001457 | 0.000298 |
| 0.00175 | 0.001700 | 0.000347 |
| 0.00200 | 0.001943 | 0.000396 |
| 0.00225 | 0.002186 | 0.000446 |
| 0.00250 | 0.002428 | 0.000495 |
| 0.00275 | 0.002671 | 0.000545 |
| 0.00300 | 0.002914 | 0.000594 |
| 0.00325 | 0.003157 | 0.000643 |
| 0.00350 | 0.003400 | 0.000693 |
| 0.00375 | 0.003643 | 0.000742 |
| 0.00400 | 0.003885 | 0.000792 |

Note: MSE = Mean squared error; CES = Current Employment Statistics. Source: U.S. Bureau of Labor Statistics.
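One rough way to see why the seasonal-factor MSE rises so much more slowly is to treat each estimated seasonal factor as a simple average of the five yearly observations for its quarter. This is a simplifying assumption for illustration only, since the X12 procedure used in the simulations is not a simple average. The symbols $\hat{s}^{C}_{q}$ (estimated CES seasonal factor), $\varepsilon^{C}_{qy}$ (CES error in quarter $q$ of year $y$), and $\sigma^{2}_{C}$ (CES error variance) are notation adopted here. With independent errors, the variance of such an average would be

```latex
\operatorname{Var}\left(\hat{s}^{C}_{q}\right)
  = \operatorname{Var}\left(\frac{1}{5}\sum_{y=1}^{5}\varepsilon^{C}_{qy}\right)
  = \frac{\sigma^{2}_{C}}{5}
  = 0.2\,\sigma^{2}_{C}.
```

The tabulated values are close to this benchmark: at a CES error variance of 0.00100, the MSE of the estimated CES seasonal factors is 0.000198, a ratio of about 0.2.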

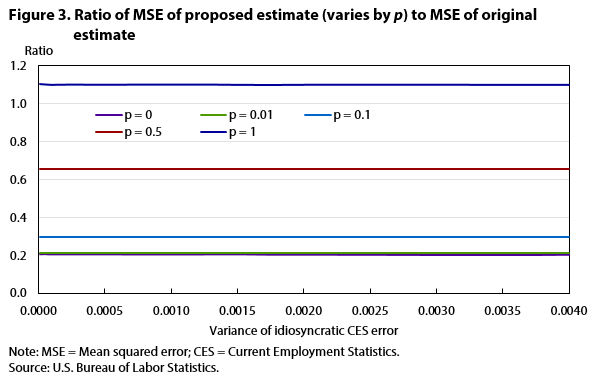

We get an even stronger result in figure 3, which shows the performance of the proposed estimator relative to the performance of the CES estimator. As in figures 1 and 2, we plot the CES error variance $\sigma^{2}_{C}$ on the horizontal axis. On the vertical axis, we now plot $\mathrm{MSE}_{\mathrm{proposed}} / \mathrm{MSE}_{\mathrm{CES}}$, the ratio of the MSE of the proposed estimator to the MSE of the CES. Each curve in the figure corresponds to a different value of the variance ratio $p$. As expected, the lower the value of $p$, the lower the corresponding curve in the figure. This relationship reflects that, for a given value of $\sigma^{2}_{C}$, the performance gain of the benchmark estimator increases as $p$ falls. Less predictable is the fact that the curves in figure 3 are all (nearly) straight lines with a slope equal to 0. This tells us that the ratio of the MSE of the proposed estimator to the MSE of the CES depends only on $p$ and not on $\sigma^{2}_{C}$. An increase in $\sigma^{2}_{C}$, accompanied by an increase in the QCEW error variance $\sigma^{2}_{Q}$ (so as to maintain a constant $p$), causes the MSEs of the proposed estimator and the CES estimator to increase by the same proportion. This means that the arithmetic difference between the two MSEs increases (as shown by the distance between the curves in figure 1).

| Variance of idiosyncratic CES error | p = 0 | p = 0.01 | p = 0.1 | p = 0.5 | p = 1 |
|---|---|---|---|---|---|
| 0.00001 | 0.204288 | 0.213588 | 0.294853 | 0.653895 | 1.102035 |
| 0.00002 | 0.204267 | 0.213561 | 0.294815 | 0.653866 | 1.101813 |
| 0.00003 | 0.204315 | 0.213606 | 0.294855 | 0.653910 | 1.101784 |
| 0.00004 | 0.204300 | 0.213590 | 0.294824 | 0.653785 | 1.101692 |
| 0.00005 | 0.204290 | 0.213585 | 0.294814 | 0.653770 | 1.101664 |
| 0.00006 | 0.204308 | 0.213605 | 0.294846 | 0.653758 | 1.101594 |
| 0.00007 | 0.204215 | 0.213508 | 0.294745 | 0.653639 | 1.101447 |
| 0.00008 | 0.204246 | 0.213538 | 0.294775 | 0.653639 | 1.101449 |
| 0.00009 | 0.204202 | 0.213494 | 0.294728 | 0.653597 | 1.101359 |
| 0.00010 | 0.204204 | 0.213496 | 0.294730 | 0.653582 | 1.101327 |
| 0.00025 | 0.204190 | 0.213481 | 0.294694 | 0.653480 | 1.101537 |
| 0.00050 | 0.204190 | 0.213463 | 0.294670 | 0.653546 | 1.101366 |
| 0.00075 | 0.204139 | 0.213415 | 0.294603 | 0.653419 | 1.101524 |
| 0.00100 | 0.204116 | 0.213410 | 0.294581 | 0.653386 | 1.101466 |
| 0.00125 | 0.204176 | 0.213460 | 0.294605 | 0.653385 | 1.101581 |
| 0.00150 | 0.204250 | 0.213541 | 0.294654 | 0.653534 | 1.101315 |
| 0.00175 | 0.204008 | 0.213292 | 0.294419 | 0.653255 | 1.101204 |
| 0.00200 | 0.203948 | 0.213211 | 0.294350 | 0.653216 | 1.101366 |
| 0.00225 | 0.203975 | 0.213256 | 0.294402 | 0.653313 | 1.101445 |
| 0.00250 | 0.203935 | 0.213202 | 0.294353 | 0.653276 | 1.101437 |
| 0.00275 | 0.203864 | 0.213140 | 0.294315 | 0.653062 | 1.101354 |
| 0.00300 | 0.203787 | 0.213073 | 0.294241 | 0.653030 | 1.101368 |
| 0.00325 | 0.203804 | 0.213083 | 0.294250 | 0.653086 | 1.101368 |
| 0.00350 | 0.203812 | 0.213082 | 0.294240 | 0.653140 | 1.101335 |
| 0.00375 | 0.203795 | 0.213068 | 0.294198 | 0.653077 | 1.101293 |
| 0.00400 | 0.203949 | 0.213209 | 0.294330 | 0.653355 | 1.101400 |

Note: MSE = Mean squared error; CES = Current Employment Statistics. Source: U.S. Bureau of Labor Statistics.
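The flatness of the curves can be checked directly against the figure 1 data, since a ratio of MSEs is the square of the corresponding ratio of RMSEs. The short sketch below does this for the p = 0.1 curve at four variance settings. The RMSE values are copied from the figure 1 data in this section, and the variable names are illustrative.

```python
# RMSE values copied from the figure 1 data:
# CES error variance -> (original CES estimate, proposed estimate with p = 0.1)
rmse_by_variance = {
    0.00001: (0.003117, 0.001692),
    0.00010: (0.009856, 0.005351),
    0.00100: (0.031167, 0.016916),
    0.00400: (0.062333, 0.033817),
}

# MSE ratio = (RMSE of proposed / RMSE of original) squared.
mse_ratios = {
    variance: (proposed / original) ** 2
    for variance, (original, proposed) in rmse_by_variance.items()
}

for variance, ratio in mse_ratios.items():
    print(f"CES variance {variance:.5f}: MSE ratio = {ratio:.4f}")
```

Each ratio comes out near 0.29, in line with the p = 0.1 column of the figure 3 data, even though the CES error variance differs by a factor of 400 between the first and last rows.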

It is worth explicitly tracing the effect of increasing the CES error variance $\sigma^{2}_{C}$ while holding the QCEW error variance $\sigma^{2}_{Q}$ constant. When $\sigma^{2}_{Q}$ is held constant, an increase in $\sigma^{2}_{C}$ implies a fall in the variance ratio $p$. In both figures 1 and 3, this would be represented by both a drop to a lower curve and a movement to the right. We therefore find that, for a given precision of the QCEW and other things the same, the less precise the CES estimator, the greater both the relative and absolute gains offered by the proposed benchmark estimator.
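A worked example from the figure 1 data illustrates this path. The three settings below all imply the same QCEW error variance (0.00001), because the ratio falls by a factor of 10 each time the CES error variance rises by a factor of 10. The RMSE values are copied from the figure 1 data; the variable names are illustrative.

```python
# (CES variance, p, original RMSE, proposed RMSE), copied from the figure 1 data.
# In every row the implied QCEW variance, p * CES variance, is 0.00001.
rows = [
    (0.00001, 1.00, 0.003117, 0.003272),
    (0.00010, 0.10, 0.009856, 0.005351),
    (0.00100, 0.01, 0.031167, 0.014398),
]

gains = []
for ces_var, p, original, proposed in rows:
    absolute_gain = original - proposed  # reduction in RMSE from benchmarking
    relative_rmse = proposed / original  # below 1 means the proposal does better
    gains.append((absolute_gain, relative_rmse))
    print(f"CES var {ces_var:.5f} (p={p}): gain={absolute_gain:+.6f}, "
          f"ratio={relative_rmse:.3f}")
```

Moving down the rows, the absolute RMSE reduction grows and the RMSE ratio falls, which is the pattern described above: with QCEW precision fixed, a noisier CES estimate gains more from benchmarking.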

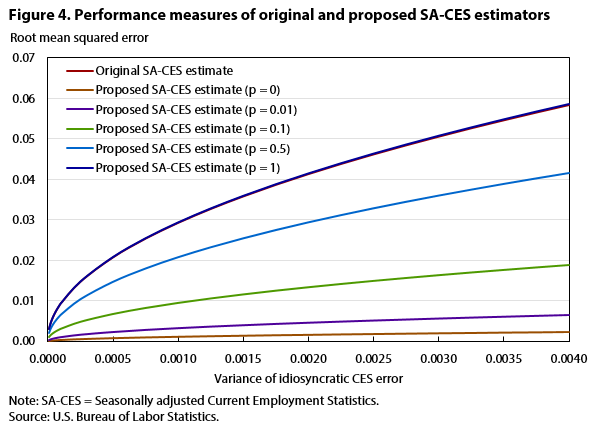

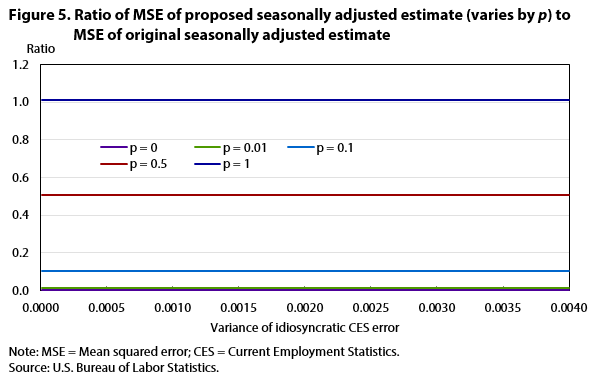

Seasonally adjusted estimates are often of greater analytical interest than nonseasonally adjusted estimates. Figures 4 and 5 summarize the performance of the proposed estimator when it is seasonally adjusted. The curves in figure 4 have the same general shape as those in figure 1, and the curves in figure 5 have the same shape as those in figure 3.

| Variance of idiosyncratic CES error | Original SA-CES estimate | Proposed SA-CES estimate (p = 0) | Proposed SA-CES estimate (p = 0.01) | Proposed SA-CES estimate (p = 0.1) | Proposed SA-CES estimate (p = 0.5) | Proposed SA-CES estimate (p = 1) |
|---|---|---|---|---|---|---|
| 0.00001 | 0.002915 | 0.000114 | 0.000319 | 0.000937 | 0.002075 | 0.002933 |
| 0.00002 | 0.004123 | 0.000162 | 0.000451 | 0.001325 | 0.002934 | 0.004147 |
| 0.00003 | 0.005049 | 0.000198 | 0.000552 | 0.001622 | 0.003593 | 0.005079 |
| 0.00004 | 0.005830 | 0.000229 | 0.000638 | 0.001873 | 0.004149 | 0.005865 |
| 0.00005 | 0.006518 | 0.000256 | 0.000713 | 0.002094 | 0.004639 | 0.006557 |
| 0.00006 | 0.007140 | 0.000280 | 0.000781 | 0.002294 | 0.005082 | 0.007182 |
| 0.00007 | 0.007712 | 0.000302 | 0.000844 | 0.002478 | 0.005488 | 0.007757 |
| 0.00008 | 0.008245 | 0.000323 | 0.000902 | 0.002649 | 0.005867 | 0.008293 |
| 0.00009 | 0.008745 | 0.000343 | 0.000957 | 0.002809 | 0.006223 | 0.008796 |
| 0.00010 | 0.009218 | 0.000362 | 0.001008 | 0.002961 | 0.006560 | 0.009272 |
| 0.00025 | 0.014576 | 0.000572 | 0.001595 | 0.004682 | 0.010370 | 0.014659 |
| 0.00050 | 0.020611 | 0.000808 | 0.002256 | 0.006621 | 0.014666 | 0.020731 |
| 0.00075 | 0.025246 | 0.000990 | 0.002763 | 0.008107 | 0.017960 | 0.025389 |
| 0.00100 | 0.029151 | 0.001143 | 0.003190 | 0.009362 | 0.020739 | 0.029318 |
| 0.00125 | 0.032592 | 0.001279 | 0.003568 | 0.010467 | 0.023187 | 0.032777 |
| 0.00150 | 0.035702 | 0.001409 | 0.003909 | 0.011466 | 0.025402 | 0.035904 |
| 0.00175 | 0.038562 | 0.001521 | 0.004220 | 0.012384 | 0.027438 | 0.038781 |
| 0.00200 | 0.041222 | 0.001626 | 0.004509 | 0.013241 | 0.029333 | 0.041459 |
| 0.00225 | 0.043722 | 0.001729 | 0.004785 | 0.014045 | 0.031113 | 0.043971 |
| 0.00250 | 0.046085 | 0.001826 | 0.005046 | 0.014805 | 0.032797 | 0.046350 |
| 0.00275 | 0.048333 | 0.001914 | 0.005294 | 0.015527 | 0.034395 | 0.048611 |
| 0.00300 | 0.050482 | 0.002001 | 0.005530 | 0.016219 | 0.035926 | 0.050774 |
| 0.00325 | 0.052543 | 0.002084 | 0.005756 | 0.016881 | 0.037392 | 0.052848 |
| 0.00350 | 0.054529 | 0.002163 | 0.005973 | 0.017519 | 0.038804 | 0.054848 |
| 0.00375 | 0.056444 | 0.002237 | 0.006184 | 0.018134 | 0.040166 | 0.056777 |
| 0.00400 | 0.058295 | 0.002315 | 0.006389 | 0.018729 | 0.041485 | 0.058639 |

Note: SA-CES = Seasonally adjusted Current Employment Statistics. Source: U.S. Bureau of Labor Statistics.

| Variance of idiosyncratic CES error | p = 0 | p = 0.01 | p = 0.1 | p = 0.5 | p = 1 |
|---|---|---|---|---|---|
| 0.00001 | 0.001534 | 0.011968 | 0.103237 | 0.506510 | 1.012153 |
| 0.00002 | 0.001535 | 0.011972 | 0.103225 | 0.506495 | 1.011971 |
| 0.00003 | 0.001540 | 0.011973 | 0.103234 | 0.506528 | 1.012037 |
| 0.00004 | 0.001541 | 0.011974 | 0.103235 | 0.506506 | 1.012021 |
| 0.00005 | 0.001541 | 0.011974 | 0.103233 | 0.506514 | 1.011936 |
| 0.00006 | 0.001541 | 0.011974 | 0.103225 | 0.506484 | 1.011767 |
| 0.00007 | 0.001538 | 0.011965 | 0.103206 | 0.506449 | 1.011703 |
| 0.00008 | 0.001539 | 0.011965 | 0.103206 | 0.506447 | 1.011717 |
| 0.00009 | 0.001540 | 0.011969 | 0.103212 | 0.506462 | 1.011763 |
| 0.00010 | 0.001540 | 0.011968 | 0.103212 | 0.506432 | 1.011765 |
| 0.00025 | 0.001539 | 0.011970 | 0.103181 | 0.506152 | 1.011421 |
| 0.00050 | 0.001538 | 0.011985 | 0.103184 | 0.506320 | 1.011678 |
| 0.00075 | 0.001536 | 0.011976 | 0.103126 | 0.506090 | 1.011361 |
| 0.00100 | 0.001537 | 0.011977 | 0.103136 | 0.506138 | 1.011490 |
| 0.00125 | 0.001541 | 0.011982 | 0.103139 | 0.506136 | 1.011385 |
| 0.00150 | 0.001558 | 0.011985 | 0.103143 | 0.506233 | 1.011348 |
| 0.00175 | 0.001555 | 0.011977 | 0.103134 | 0.506274 | 1.011391 |
| 0.00200 | 0.001557 | 0.011965 | 0.103177 | 0.506355 | 1.011532 |
| 0.00225 | 0.001564 | 0.011975 | 0.103191 | 0.506388 | 1.011423 |
| 0.00250 | 0.001569 | 0.011989 | 0.103204 | 0.506465 | 1.011534 |
| 0.00275 | 0.001568 | 0.011995 | 0.103202 | 0.506411 | 1.011537 |
| 0.00300 | 0.001572 | 0.012000 | 0.103223 | 0.506459 | 1.011602 |
| 0.00325 | 0.001572 | 0.012000 | 0.103221 | 0.506440 | 1.011643 |
| 0.00350 | 0.001574 | 0.011999 | 0.103220 | 0.506405 | 1.011734 |
| 0.00375 | 0.001570 | 0.012002 | 0.103217 | 0.506386 | 1.011834 |
| 0.00400 | 0.001577 | 0.012010 | 0.103221 | 0.506430 | 1.011837 |

Note: MSE = Mean squared error; CES = Current Employment Statistics. Source: U.S. Bureau of Labor Statistics.

We propose replacing the annual benchmarking procedure currently in place for national estimates with one based on quarterly benchmarking of seasonally adjusted CES estimates to the seasonally adjusted QCEW. The proposed estimator performs well when applied to the national all-employee series. The gain from more frequent updating is especially large when monthly CES errors are positively correlated, as was the case at the beginning of the Great Recession.

The results of our simulation exercise apply equally to any series, be it total or industry, or national or local. We used the simulation exercise to compare the performance of the proposed quarterly benchmarking estimate with the initial CES estimate. The results demonstrate that, even when we control for a loss of precision in the estimation of seasonal factors, the greater the variance of the CES estimate, the greater both the relative and absolute gains provided by the proposed quarterly benchmarking procedure. The CES industry and area estimates have a greater variance than the national all-employee series. Therefore, one can reasonably argue that there is an even stronger case for applying the proposed quarterly benchmarking procedure to the industry and area estimates.

The discussion in this article has focused mostly on the national CES estimates. However, the methodology can also be applied to the state and area estimates. As noted in the introductory discussion, state and metropolitan area estimates are currently benchmarked annually by replacing sample-based estimates with all available months of population data. An undesirable feature of the resulting hybrid series is the confounding of QCEW and CES seasonality. Difficulties remain in seasonally adjusting the hybrid series.

As originally noted by Franklin D. Berger and Keith R. Phillips, it is best to seasonally adjust the QCEW and CES components of the hybrid series separately.19 However, as discussed by others, a problem arises at the seam where the QCEW data end and the CES data begin, because differences in the seasonal factors at the seam will affect the growth rate of the hybrid series at the seam point.20 An advantageous feature of our proposed methodology is that it produces a series that has CES seasonality throughout and can therefore be seasonally adjusted without undue complication.21

Berger, Franklin D., and Keith R. Phillips. “Reassessing Texas employment growth.” The Southwest Economy (Federal Reserve Bank of Dallas, July/August 1993), pp. 1–3.

Berger, Franklin D., and Keith R. Phillips. “Solving the mystery of the disappearing January blip in state employment data.” Economic Review (Federal Reserve Bank of Dallas, second quarter 1994).

Dey, Matthew, and Mark A. Loewenstein. “Quarterly benchmarking for the Current Employment Survey.” Working Paper 496 (U.S. Bureau of Labor Statistics, June 2017).

Groen, Jeffrey A. “Seasonal differences in employment between survey and administrative data.” Working Paper 443 (U.S. Bureau of Labor Statistics, 2011).

Manning, Christopher D., and John R. Stewart. “Benchmarking the Current Employment Statistics national estimates.” Monthly Labor Review, October 2017, https://doi.org/10.21916/mlr.2017.25.

Mueller, Kirk J. “Benchmarking the Current Employment Statistics state and area estimates.” Monthly Labor Review, November 2017, https://doi.org/10.21916/mlr.2017.26.

Phillips, Keith R., and Jiango Wang. “Seasonal adjustment of state and metro CES jobs data.” Working Paper 1505 (Federal Reserve Bank of Dallas, 2015).

Robertson, Kenneth. “Benchmarking the Current Employment Statistics survey: perspectives on current research.” Monthly Labor Review, November 2017, https://doi.org/10.21916/mlr.2017.27.

Scott, Stuart, George Stamas, Thomas J. Sullivan, and Paul Chester. “Seasonal adjustment of hybrid economic time series.” Proceedings of the Section on Survey Research Methods (American Statistical Association, 1994), https://www.bls.gov/osmr/research-papers/1994/pdf/st940350.pdf.

Walstrum, Thomas. “‘Early benchmarking’ the state payroll employment survey.” Employment, Midwest, Seventh District (Federal Reserve Bank of Chicago, June 2015).

ACKNOWLEDGMENT: We thank Steve Mance, Steve Miller, Kirk Mueller, and Ken Robertson for providing helpful comments in the drafting of this article.

Mark A. Loewenstein and Matthew Dey, "A quarterly benchmarking procedure for the Current Employment Statistics program," Monthly Labor Review, U.S. Bureau of Labor Statistics, November 2017, https://doi.org/10.21916/mlr.2017.28

1 For more background on the CES, see Kenneth Robertson, “Benchmarking the Current Employment Statistics survey: perspectives on current research,” Monthly Labor Review, November 2017, https://doi.org/10.21916/mlr.2017.27.

2 In addition to the authors of this article, the BLS benchmarking team includes Greg Erkens, Larry Huff, Christopher Manning, Kirk Mueller, Steven Mance, Kenneth Robertson, and David Talan.

3 For further discussion of this issue, see Robertson, “Benchmarking the Current Employment Statistics survey.”

4 With the aim of making this article accessible to a larger audience, we have omitted a number of technical details and nearly all mathematical derivations. These can be found in our more technical working paper, which provides the basis for the present article. See Matthew Dey and Mark A. Loewenstein, “Quarterly benchmarking for the Current Employment Survey,” Working Paper 496 (U.S. Bureau of Labor Statistics, June 2017).

5 For a more detailed description, see Christopher D. Manning and John R. Stewart, “Benchmarking the Current Employment Statistics national estimates,” Monthly Labor Review, October 2017, https://doi.org/10.21916/mlr.2017.25.

6 New birth–death factors are also calculated with the use of the more recent data. For more information on the

7 The birth–death model corrects for business births and deaths that are not captured in the CES survey. For an explanation of the model, see “CES net birth/death model,” Current Employment Statistics—CES (national) (U.S. Bureau of Labor Statistics), https://www.bls.gov/web/empsit/cesbd.htm.

8 More precisely, the CES growth rate for month $m$ is the ratio of the CES employment estimate in month $m$ to the CES employment estimate in month $m-1$. When employment growth is positive, this ratio is greater than 1. When employment growth is negative, it is less than 1. The definition is similar to that of all other growth rates presented in our analysis.

9 Not only does QCEW employment information become available on a quarterly basis, but the information in the last month of a quarter is more reliable than the information during the first 2 months of a quarter.

10 A preliminary QCEW employment estimate is available 3 months earlier. It is quite possible that little accuracy is lost in using the preliminary QCEW estimates rather than the final QCEW estimates. We plan to explore this possibility in future work.

11 Note that εm > 0 if the CES employment growth estimate is too high, and εm < 0 if the CES employment growth estimate is too low.

12 The assumption that true employment levels can be observed quarterly helps simplify the exposition in this section, allowing us to focus on essentials. We will return to this point later, when we discuss our recommended benchmarking methodology.

13 This statement is true if the quarterly CES errors are distributed independently or are positively correlated. With sufficiently strong negative correlation, it is possible for the variance of the revised estimate to actually exceed the variance of the initial estimate, but this case is extremely unlikely. In contrast, there have been times during which the quarterly CES errors have, in fact, been positively correlated. A prime example is the onset of the Great Recession, when CES understated employment losses.

14 The discussion abstracts from the possibility that errors in the CES are negatively correlated. If that were the case, there would be an even greater tendency for the sum of the absolute values of the quarterly March revisions to exceed the absolute value of the annual March revision. This situation is unlikely to occur in practice. Even if it does, it would not mean that the quarterly revisions do not improve the employment estimates throughout the year.

15 See, for example, Franklin D. Berger and Keith R. Phillips, “Reassessing Texas employment growth,” The Southwest Economy (Federal Reserve Bank of Dallas, July/August 1993), pp. 1–3; Berger and Phillips, “Solving the mystery of the disappearing January blip in state employment data,” Economic Review (Federal Reserve Bank of Dallas, second quarter 1994); and Jeffrey A. Groen, “Seasonal differences in employment between survey and administrative data,” Working Paper 443 (U.S. Bureau of Labor Statistics, 2011).

16 The proposed revision procedure uses only QCEW employment estimates for the final month of any quarter, because these estimates are more reliable than those for the first 2 months of any quarter. Evidence for this is provided by the existence of “seam effects” across quarters: monthly employment changes between the last month of a quarter and the first month of the next quarter are typically larger in absolute value and less likely to be zero than those between the months within a quarter. This suggests that employers filling out the UI reports underlying the QCEW have a tendency to copy backward from the third month of the quarter to the first and second months. See Groen, “Seasonal differences in employment between survey and administrative data.”

Note that ![]() , the quarterly growth rate in the benchmarked estimate, is equal to the product of the three monthly growth rates: ![]() = ![]() . Performing this multiplication with the use of equation (7), one obtains ![]() , where ![]() = ![]() is the original CES estimate of the growth rate in quarter ![]() .
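The product relationship stated in this note is a telescoping identity, which can be written out as follows. The notation is an assumption introduced here for illustration: $E_m$ stands for the benchmarked employment level in month $m$, growth rates are treated as gross ratios, and the quarter is taken to end in month $m$. The symbols are not necessarily those of the article, and the step that relies on equation (7) is not reproduced.

```latex
% Assumed notation: E_m is the benchmarked employment level in month m.
% The quarterly (gross) growth rate is the product of the three monthly ratios.
\frac{E_m}{E_{m-3}}
  = \frac{E_{m-2}}{E_{m-3}}
    \cdot \frac{E_{m-1}}{E_{m-2}}
    \cdot \frac{E_m}{E_{m-1}}
```

The intermediate levels $E_{m-2}$ and $E_{m-1}$ cancel, so the quarterly rate depends only on the levels at the start and end of the quarter.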

17 Deviating from current practice, we have estimated the seasonal factors concurrently. This distinction is not central to our analysis.

18 We have simplified the analysis by assuming that seasonality does not change over time. In future work, we plan to relax this assumption.

19 Berger and Phillips, “Reassessing Texas employment growth,” pp. 1–3.

20 See Stuart Scott, George Stamas, Thomas J. Sullivan, and Paul Chester, “Seasonal adjustment of hybrid economic time series,” Proceedings of the Section on Survey Research Methods (American Statistical Association, 1994), https://www.bls.gov/osmr/research-papers/1994/pdf/st940350.pdf; and Keith R. Phillips and Jianguo Wang, “Seasonal adjustment of state and metro CES jobs data,” Working Paper 1505 (Federal Reserve Bank of Dallas, 2015).

21 In fact, the benchmarked quarterly growth rates are essentially the QCEW growth rates with the CES seasonality. Of course, a second advantage of our proposed methodology is that QCEW estimates are incorporated sooner. The Federal Reserve Bank of Dallas incorporates the QCEW information as soon as the QCEW data become available, but, like BLS, does so by producing a hybrid series. In a recent article, Thomas Walstrum examines the effect of applying this early benchmarking procedure to update the CES employment estimates for the five states in the Seventh Federal Reserve District. See Thomas Walstrum, “‘Early benchmarking’ the state payroll employment survey,” Employment, Midwest, Seventh District (Federal Reserve Bank of Chicago, June 2015).