United States Department of Labor

Authors: Grayson Armstrong, Gray Jones, Tucker Miller, and Sharon Pham

This paper was published as part of the Consumer Expenditure Surveys Program Report Series.

Overview

Summary of changes to data collection during COVID-19

Final disposition rates of eligible sample units (Diary and Interview Surveys)

Records Use (Interview Survey)

Expenditure edit rates (Diary and Interview Surveys)

Income imputation rates (Diary and Interview Surveys)

Respondent burden (Interview Survey)

Information book use (Interview and Diary Surveys)

Survey Time (Interview and Diary Surveys)

Survey Mode (Interview and Diary Surveys)

The Reference Guide accompanies the annual Data Quality Profile (DQP) for the Consumer Expenditure Surveys (CE). It contains supplemental material on metric description and documentation that CE data users can reference when reading the annual DQP. Table 1 outlines the metrics produced for the DQP, along with the error dimensions that each metric is intended to monitor, the survey to which it applies (CE Interview Survey or CE Diary Survey), and the time series available for the metric.

Table 1. Metrics by error dimensions, survey, and DQP production years

| Metric | Total Survey Error dimension: Measurement | Total Survey Error dimension: Nonresponse | Total Survey Error dimension: Processing | Total Survey Error dimension: Cost | Survey: Interview | Survey: Diary | Time series |
|---|---|---|---|---|---|---|---|
| 1. Final disposition rates of eligible units | | ✓ | | | ✓ | ✓ | 2010 - present |
| 2. Records use | ✓ | | | | ✓* | | 2016 - present |
| 3. Expenditure edit rates | ✓ | ✓ | ✓ | | ✓ | ✓ | 2010 - present |
| 4. Income imputation rates | ✓ | ✓ | ✓ | | ✓ | ✓ | 2010 - present |
| 5. Respondent burden | ✓ | ✓ | ✓ | | ✓ | | 2017 - present |
| 6. Information book use | ✓ | | | | ✓* | ✓ | 2016 - present |
| 7. Survey mode | ✓ | | | | ✓* | ✓+ | 2016 - present |
| 8. Survey time | ✓ | | | ✓ | ✓* | ✓ | 2016 - present |

* These CE Interview Survey metrics are conditioned by wave.

+ This CE Diary Survey metric is new to the CE Data Quality Profile, and dates back to 2020 quarter 2.

This section provides a brief overview of the changes in data collection that occurred in response to the COVID-19 pandemic and the resulting public safety guidelines.

March 2020 - The Census Bureau suspended all in-person interviews.

July 2020 - Interviewers resumed in-person interviews in some locations. Since then, in-person interviews have expanded as local regulations allowed.

July 2020 - An online mode became available in the CE Diary Survey due to the pandemic but was not officially implemented into CE production until July of 2022.

April 2021 - The Bureau of Labor Statistics discontinued in-house COVID-19 nonresponse classification, and the Census Bureau began treating COVID-19-related cases as illness-related refusals.

The unit of observation for the CE is the consumer unit (CU), so response and nonresponse rates are computed at the CU level. The CE adopts the Census Bureau's categorization of eligible CUs that do not respond to the survey as a "Type A nonresponse." Type A nonresponse is differentiated into subcategories of reasons for nonresponse: "Noncontact" when the interviewer is unable to contact an eligible member of the CU; "Refusal" when the contacted CU member refuses; and "Other nonresponse" for miscellaneous other reasons. Among the "Type A nonresponse" reasons is a minimal expenditure edit check performed by the CE that can change an interviewer-coded "completed interview" for a CU to "non-respondent"; this type of edit is referred to as nonresponse reclassification. A mapping of the CE final disposition codes to the American Association for Public Opinion Research (AAPOR) final disposition codes for in-person, household surveys is presented in this section.

Response and nonresponse rates are measures of cooperation levels in a survey. Since not all eligible sample units will be available or agree to participate in the survey, there will be some nonresponse to the survey request. Characteristics of non-respondents may differ from respondents, and if these characteristics correlate with their expenditures, their omission from the survey may result in biased estimates. While weighting adjustments may reduce bias, the effectiveness of this approach depends on the availability and quality of variables used in the weighting, so concerns about bias persist. A single, survey-level measure, such as a survey response rate, in itself is an inadequate measure of nonresponse error. Nevertheless, higher response rates are preferred in the absence of other indicators of nonresponse bias.

The nonresponse reclassification check is conducted in both the CE Interview Survey and CE Diary Survey. The nonresponse reclassification rates can serve as an indicator of the potential for nonresponse bias because the minimal expenditure edit (which triggers reclassification) converts these respondents to non-respondents. If those reclassified as non-respondents are systematically different from respondents in their spending patterns, nonresponse bias will result. All else equal, lower reclassification rates are desired.

Response rates can be reported unweighted or weighted. Unweighted response rates provide an indication of the proportion of the sample that resulted in useable information to produce estimates. They also serve as a means of monitoring the progress of fieldwork and for identifying problems with nonresponse that can be addressed during fieldwork operations. Weighted response rates provide an indication of the proportion of the survey population for which useable information is available, since the weights allow for inference of the sample to the population. The weights typically used are base weights (the inverse probability of selecting the sample units). Weighted and unweighted response rates for CE do not meaningfully differ, so only unweighted rates are presented in the DQP.
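The distinction between the two rates can be illustrated with a small sketch. The source does not give a formula for the weighted rate; the version below assumes the common convention of dividing the summed base weights of responding units by the summed base weights of all eligible units. The function name, the input layout, and the sample values are hypothetical.

```python
def response_rates(units):
    """Return (unweighted, weighted) response rates in percent.

    units: iterable of (selection_probability, responded) pairs,
    one per eligible sample unit. This layout is an assumption
    made for illustration only.
    """
    units = list(units)
    if not units:
        raise ValueError("no eligible sample units")

    # Unweighted: share of eligible sample units that responded.
    unweighted = sum(1 for _, responded in units if responded) / len(units)

    # Base weight = inverse of the selection probability.
    weights = [1.0 / prob for prob, _ in units]
    responding_weight = sum(
        w for w, (_, responded) in zip(weights, units) if responded
    )
    weighted = responding_weight / sum(weights)

    return 100 * unweighted, 100 * weighted


# Made-up sample: two units drawn with probability 0.5, two with 0.25.
sample = [(0.5, True), (0.5, True), (0.25, False), (0.25, True)]
unweighted_rate, weighted_rate = response_rates(sample)
```

When selection probabilities are nearly equal across units, the two rates converge, which is consistent with the observation that the weighted and unweighted CE rates do not meaningfully differ.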

Each survey wave is treated independently (that is, as an independent CU) in the computation of the CE Interview Survey response rates, and each diary week is treated independently for the CE Diary Survey response rates. In the metric tables for Final Disposition, the number of eligible CUs is the count of CU addresses eligible for the interview in the collection period, not the count of unique CUs.

Response and nonresponse rates

Table 3 shows the mapping of the CE final disposition codes to the AAPOR final disposition codes for in-person household surveys (AAPOR Standard Definitions, 2016).

Table 3. Mapping of CE final disposition codes with AAPOR codes

| AAPOR final disposition (in-person, household survey) | AAPOR code | CE Interview Survey final disposition codes | CE Diary Survey final disposition codes |
|---|---|---|---|
| 1. Interview | 1.0 | | |
| Complete (I) | 1.1 | 201 Completed interview | 201 Completed interview |
| Partial (P) | 1.2 | 203 Sufficient partial (through Section 20, no further follow-up) | 217 Interview - Temporarily Absent[1] |
| 2. Eligible, Non-Interview | 2.0 | | |
| Refusal and break-offs (R) | 2.10 | | |
| Refusals | 2.11 | 321 Refused, hostile (A); 322 Refused, time (A); 323 Refused, language (A); 324 Refused, other (A) | 321 Refused, hostile (A); 322 Refused, time (A); 323 Refused, language (A); 324 Refused, other - specify (A) |
| Household-level refusal | 2.111 | n/a | n/a |
| Known respondent refusal | 2.112 | n/a | n/a |
| Break-off | 2.12 | 215 Insufficient partial (A) | |
| Non-contact (NC) | 2.20 | | |
| No one at residence | 2.24 | 216 No one home | 216 No one home |
| Respondent away/unavailable | 2.25 | 217 Temporarily absent | |
| Other (O) | 2.30 | 324 Refused, other | 324 Refused, other |
| Unable to enter building/reach housing unit | 2.23 | 219 Other (A) | 219 Other (A) |
| Dead | 2.31 | 219 Other (A) | 219 Other (A) |
| Physically or mentally unable/incompetent | 2.32 | 219 Other (A) | 219 Other (A) |
| Language (did not refuse) | 2.33 | 219 Other (A) | 219 Other (A) |
| Household-level language problem | 2.331 | 219 Other (A) | 219 Other (A) |
| Respondent language problem | 2.332 | 219 Other (A) | 219 Other (A) |
| No interviewer available for needed language | 2.333 | 219 Other (A) | 219 Other (A) |
| Miscellaneous | 2.36 | 219 Other (A) | 219 Other (A); 320 Second week diary picked up too early (A); 325 Diary placed too late (A); 326 Blank diary, majority of items recalled w/o receipts (A) |
| 3. Unknown eligibility, non-interview[2] | 3.0 | | |
| Unknown if housing unit occupied (UH) | 3.10 | n/a | n/a |
| Not attempted or worked | 3.11 | | |
| Unable to reach/unsafe area | 3.17 | | |
| Unable to locate address | 3.18 | 258 Unlocated sample address (C): Treated as ineligible for CE | 258 Unlocated sample address (C): Treated as ineligible for CE |
| Housing unit/Unknown if eligible respondent (UO) | 3.20 | n/a | n/a |
| No screener completed | 3.21 | | |
| Other | 3.90 | | |
| 4. Not Eligible | 4.0 | | |
| Out of sample | 4.10 | | |
| Not a housing unit | 4.50 | 228 Unfit, to be demolished (B); 229 Under construction, not ready (B); 231 Unoccupied tent/trailer site (B); 232 Permit granted, construction not started (B); 240 Demolished (C); 241 House/trailer moved (C); 243 Converted to permanent nonresidential (C) | 228 Unfit, to be demolished (B); 229 Under construction, not ready (B); 231 Unoccupied tent/trailer site (B); 232 Permit granted, construction not started (B); 240 Demolished (C); 241 House/trailer moved (C); 243 Converted to permanent nonresidential (C) |
| Business, government office, other organization | 4.51 | 243 Converted to permanent nonresidential (C) | 243 Converted to permanent nonresidential (C) |
| Institution | 4.52 | n/a | n/a |
| Group quarters | 4.53 | 252 Located on military base or post (C) | 252 Located on military base or post (C) |
| Vacant housing unit | 4.60 | 226 Vacant for rent (B); 331 Vacant for sale (B); 332 Vacant other (B); 341 CU moved (C); 342 CU merged with another CE CU within the same address (C) | 226 Vacant for rent (B); 331 Vacant for sale (B); 332 Vacant other (B); 341 CU moved (C); 342 CU merged with another CE CU within the same address (C) |
| Regular, Vacant residences | 4.61 | | |
| Seasonal/Vacation/Temporary residence | 4.62 | 332 Vacant other (B); 225 Occupied by persons with URE (B) | 332 Vacant other (B); 225 Occupied by persons with URE (B) |
| Other | 4.63 | 233 Other (B); 244 Merged units within same structure (C); 245 Condemned (C); 247 Unused serial number or listing sheet (C); 248 Other (C); 259 Unit does not exist or is out of scope; 290 Spawned in error (C) | 233 Other (B); 244 Merged units within same structure (C); 245 Condemned (C); 247 Unused serial number or listing sheet (C); 248 Other (C); 259 Unit does not exist or is out of scope |
| No eligible respondent | 4.70 | 224 All persons under 16 (B) | 224 All persons under 16 (B) |
| Quota filled | 4.80 | n/a | n/a |

Note: Census Bureau non-interview categories: (A) = Type A, (B) = Type B, (C) = Type C

In the following definitions for eligible sample, response rate, refusal rate, noncontact rate, and other nonresponse rate, the formulas contain the variables I, P, R, NC, and O, which refer to groupings of final disposition codes that are defined in table 3 above.

Eligible Sample (denominator for response, refusal, noncontact, and other nonresponse rates)

= I + P + R + NC + O

The total number of eligible units - those who completed interviews (I, P), plus nonresponse due to refusals, non-contact, or other reasons (R, NC, O). This excludes any address that was sampled and ineligible (for example, an abolished household at a sampled address or a commercial business at a sampled address).

Response Rate (AAPOR definition RR2)

= (I + P) / (I + P + R + NC + O)

Defined as the total number of good and partial interviews (interviews that provide data for use in the production tables), divided by the eligible sample. For the CE, housing units of unknown eligibility are coded as "Eligible non-interview" (i.e., Type A).

Refusal Rate (AAPOR definition REF3)

= R / (I + P + R + NC + O)

Defined as the total number of eligible nonresponses that were refused, or started but not completed, divided by the eligible sample. Refused interviews include refusals due to time, language problems, and other types of refusals.

Noncontact Rate (1 - AAPOR definition CON3)

= NC / (I + P + R + NC + O)

Defined as total number of eligible nonresponses, due to inability to make contact with an eligible sample unit member, divided by the eligible sample.

Other Nonresponse Rate

= O / (I + P + R + NC + O)

Defined as total number of eligible nonresponses, due to reasons other than refusal and noncontact with an eligible sample unit member, divided by the eligible sample.

The Response Rate, Refusal Rate, Noncontact Rate, and Other Nonresponse Rate together sum to 100 percent of eligible sample units. In addition to these four rates, we also report the Nonresponse Reclassification rate, which covers a subset of the Other Nonresponse cases.
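The four rate definitions above can be sketched in a few lines. The class name, function name, and counts below are hypothetical and chosen only for illustration; the formulas follow the definitions given in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DispositionCounts:
    """Counts of eligible cases by the groupings defined in table 3."""
    I: int   # complete interviews
    P: int   # sufficient partial interviews
    R: int   # refusals and break-offs
    NC: int  # noncontacts
    O: int   # other nonresponse

    @property
    def eligible(self) -> int:
        # Eligible sample = I + P + R + NC + O
        return self.I + self.P + self.R + self.NC + self.O


def disposition_rates(c: DispositionCounts) -> dict[str, float]:
    """Return the four final disposition rates in percent."""
    n = c.eligible
    if n == 0:
        raise ValueError("no eligible sample units")
    return {
        "response": 100 * (c.I + c.P) / n,
        "refusal": 100 * c.R / n,
        "noncontact": 100 * c.NC / n,
        "other_nonresponse": 100 * c.O / n,
    }


# Made-up counts, not CE data.
counts = DispositionCounts(I=450, P=50, R=300, NC=120, O=80)
rates = disposition_rates(counts)
```

Because every eligible case falls into exactly one grouping, the four values always sum to 100 percent.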

Nonresponse reclassification rate

Defined as the total number of interviews that were changed from completed to a Type A non-interview based on a review of total expenditures (CE's Minimal Expenditure Edit routine) and other information about the CU, divided by the eligible sample. Reclassification of an interview from completed to Type A non-interview is based on analysts' expert judgement when there is evidence that what is reported is not an accurate representation of the CU's spending. This review process differs between the CE Interview Survey and the CE Diary Survey.

For the CE Interview Survey

Only a small number of completed interviews are reclassified as Type A non-interviews in the CE Interview Survey. Cases are flagged for review when the CU reports either less than $100 in total expenditures, or less than $300 in total expenditures together with a total interview time of less than 15 minutes. Review of these cases is done holistically, and the requirement for the nonresponse reclassification review offers the following guidance: "low expenditure totals and low interview time contribute to reclassification. Phone interviews, converted refusal cases, hostile respondents in previous interviews, proxy respondents, and refused or unknown demographic information should also contribute towards reclassifying a case to a Type A non-interview." Additionally, analysts have access to interviewer notes made during the interview that can provide more detail about the case, such as respondent reactions to questions.
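The screening condition that selects cases for review might be sketched as follows. The function and parameter names are hypothetical, and the grouping of the conditions (under $100 alone, or under $300 combined with a short interview) is an assumed reading of the text above. The function only selects cases for review; the reclassification decision itself rests on analyst judgement.

```python
def flag_for_reclassification_review(total_expenditures: float,
                                     interview_minutes: float) -> bool:
    """Return True if a completed interview would be sent to analysts.

    Assumed reading of the rule:
      total < $100, OR (total < $300 AND interview time < 15 minutes).
    """
    very_low_spending = total_expenditures < 100
    low_spending_and_short = (
        total_expenditures < 300 and interview_minutes < 15
    )
    return very_low_spending or low_spending_and_short
```

For example, a case with $250 in reported expenditures would be flagged after a 10-minute interview but not after a 30-minute one.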

For the CE Diary Survey

A larger number of completed interviews are reclassified in the CE Diary Survey. Consistent with this higher incidence of reclassification, the nonresponse reclassification procedure is rule-driven and requires no analyst judgement. The rules for the CE Diary Survey nonresponse reclassification are as follows:

· Zero items in both weeks: Diaries with zero items reported in both weeks of the survey; OR Diaries with zero items reported and the diary from the other diary week is a Type A, B, or C non-interview.

· Zero items in one week: Diaries with zero items reported in one week and the diary from the other diary week has > 10 items reported in FDB with the total cost of these items being <= $50 OR Diaries with zero items reported and the diary from the other diary week has <= 10 items reported in FDB with the total cost of these items being <= $50 and the CU does not live in a rural area or a college dormitory, and no members of the CU were away during the reference period.

· Single member CUs: Diaries where there is one person in the CU and the total amount spent on food (at home and away from home) is <= $5 in the current week and <= $15 in the other diary week, and the number of items reported for non-food items in the current week is < 4 or the total cost of items reported for non-food items in the current week is < $30.

· Two or three member CUs: Diaries where there are 2 or 3 members in the CU and the total amount spent on food (at home and away from home) is <= $10 in the current week and <= $20 in the other diary week, and the number of non-food items reported in the current week is < 4 or the total cost of non-food items reported in the current week is < $30.

· Four or more members: Diaries where there are four or more CU members and the total amount spent on food (at home and away from home) is <= $20 in the current week and <= $30 in the other diary week, and the number of non-food items reported in the current week is < 4 or the total cost of non-food items reported in the current week is < $30.
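The three CU-size rules share one structure and differ only in their food thresholds, which the sketch below makes explicit. It covers only those three rules, not the zero-item rules. All names are hypothetical, and the grouping of the conditions (both food limits met, and either the non-food item count or the non-food cost below its limit) is an assumed reading of the rule text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiaryWeek:
    cu_size: int                  # number of CU members (>= 1)
    food_current: float           # food spending, current week ($)
    food_other: float             # food spending, other diary week ($)
    nonfood_items_current: int    # non-food items reported, current week
    nonfood_cost_current: float   # cost of those non-food items ($)


# (largest CU size covered, food limit current week, food limit other week)
# None means "no upper bound" (four or more members).
FOOD_LIMITS = [
    (1, 5.0, 15.0),
    (3, 10.0, 20.0),
    (None, 20.0, 30.0),
]


def food_limits(cu_size: int) -> tuple[float, float]:
    """Look up the food thresholds that apply to a CU of this size."""
    if cu_size < 1:
        raise ValueError("cu_size must be at least 1")
    for max_size, current_limit, other_limit in FOOD_LIMITS:
        if max_size is None or cu_size <= max_size:
            return current_limit, other_limit
    raise AssertionError("unreachable: last row has no upper bound")


def meets_size_rule(d: DiaryWeek) -> bool:
    """Return True if the diary meets the CU-size reclassification rule."""
    current_limit, other_limit = food_limits(d.cu_size)
    low_food = (d.food_current <= current_limit
                and d.food_other <= other_limit)
    low_nonfood = (d.nonfood_items_current < 4
                   or d.nonfood_cost_current < 30)
    return low_food and low_nonfood
```

Holding the thresholds in a table keeps the three rules in one place, so a change to a limit does not require touching the comparison logic.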

Responses to survey questions about spending that are based on expenditure records result in higher reporting accuracy and lower measurement error, so a higher prevalence of records use is desirable. Using records is optional for respondents, so respondents who choose to use any records at all - even if only for occasional reference on an as-needed basis - are likely more engaged than those who choose not to consult records. In addition, it is plausible that "no or very few records were used" would be more salient in an interviewer's recollection of the interview than the varying extent of records use in the other response options. Note that respondents' use of records is reported by interviewers based on their subjective judgement at the end of the interview. For interviews conducted by telephone, determining whether a respondent used records can be even more subjective. In addition, interviewers need not respond to the question to close out the case, hence the high incidence of item nonresponse for the records use question.

This metric is based on the overall records use question asked of the interviewer at the end of the CE Interview Survey, and its response options are mapped into metric subgroups as shown in table 4.

CE Interview Survey records use question: "In the interview, how often did the respondent consult records?"

Table 4. Record use mapping

| CE Interview Survey records use response options | Mapping to metric subgroups |
|---|---|
| 1. Almost always | Records used |
| 2. Most of the time | Records used |
| 3. Occasionally or used at least 1 record | Records used |
| 4. Never, no records used | None |
| (nonresponse) | Missing |

The metric tabulation is conditioned on wave; the wave subgroups are wave 1, waves 2 and 3, and wave 4.
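A minimal sketch of this tabulation, assuming each case is available as a (wave, response code) pair with a missing answer represented as None. The function name and the input layout are hypothetical; the mappings follow table 4 and the wave subgroups named above.

```python
from collections import Counter, defaultdict

# Response option -> metric subgroup (table 4).
RECORDS_USE_GROUP = {
    1: "Records used",
    2: "Records used",
    3: "Records used",
    4: "None",
}

# Interview wave -> wave subgroup used for the tabulation.
WAVE_GROUP = {
    1: "Wave 1",
    2: "Waves 2 and 3",
    3: "Waves 2 and 3",
    4: "Wave 4",
}


def records_use_shares(cases):
    """Return percent of cases per metric subgroup within each wave group.

    cases: iterable of (wave, response_code) pairs, where response_code
    is 1-4 or None when the interviewer left the question unanswered.
    """
    counts = defaultdict(Counter)
    for wave, code in cases:
        subgroup = RECORDS_USE_GROUP.get(code, "Missing")
        counts[WAVE_GROUP[wave]][subgroup] += 1
    return {
        wave_group: {
            subgroup: 100 * n / sum(counter.values())
            for subgroup, n in counter.items()
        }
        for wave_group, counter in counts.items()
    }


# Made-up cases, not CE data.
sample = [(1, 1), (1, 4), (1, None), (1, 3), (2, 2), (3, 4)]
shares = records_use_shares(sample)
```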

At the completion of an interview, data from the interviewer's laptop are transmitted to the Census Master Control System. The Census Bureau's Demographic Surveys Division performs some preliminary processing and reformatting of the data before transmitting the data to BLS on a monthly basis. At BLS, a series of automated and manual edits are applied to the data in order to ensure consistency, fill in missing information, and correct errors in the collected data. (For more on data collection and processing for the CE, see the Handbook of Methods: Consumer Expenditure Survey.)

Edits are defined as any changes in the data made during processing, with the exception of calculations (e.g. conversion of weekly value to quarterly value) and top-coding/suppression. Calculations are not considered edits as they do not require BLS to make any assumptions about a response. Top-coding and suppression are not considered edits as they only apply to the public-use microdata (PUMD) and not to the internal research files or the published tables. Imputation and allocation are the two major types of data edits performed on the Interview and Diary Surveys:

· Imputation replaces missing or invalid entries with a valid response.

· Allocation edits are applied when respondents provide insufficient detail to meet tabulation requirements. For example, if a respondent provides a non-itemized overall expenditure report for the category of fuels and utilities, that overall amount will be allocated to the target items mentioned by the respondent (such as natural gas and electricity).

In addition to allocation and imputation, data are reviewed, and certain cases are manually edited by BLS analysts based on research and expert judgment. When making manual edits, BLS analysts are able to use previously reported data from the respondent, descriptions of the items reported, and interviewer notes attached to the case. It is possible for multiple edits to be applied to a single expenditure, and for the purposes of calculating expenditure edit rates this is counted as a single edited record. Non-expenditure variables may also be edited, and these edits are not currently captured in the expenditure edit rates.

The need for data imputation results from missing data (item or expense nonresponse). Thus, lower imputation rates are desirable. The need for data allocation is a consequence of responses that did not contain the required details of the item asked by the survey. Likewise, lower allocation rates are also preferred, and in general, lower data editing rates are preferred since that lowers the risk of processing error. However, imputation based on sound methodology can improve the completeness of the data and improve the overall quality of survey estimates.

Ideally, the computation of edit rates is based on the edit flag values of the expenditure variables that correspond directly to the survey questions about the expenditures. This is how edit rates are computed for the CE Diary Survey. However, the CE Interview Survey has hundreds of expenditure variables spread across more than 40 data tables. To overcome this challenge, CE Interview Survey edit rates are calculated from a modified version of the interview monthly tabulation file (MTBI).

Reported expenditures

For the calculation of expenditure edit rates, a distinction is made between the set of expenditure records based on respondent reports (reported expenditure records) and the set of post-processing expenditure records used to produce official tables and released as public-use microdata (processed expenditure records). The set of reported expenditure records is smaller than the set of processed expenditure records. During BLS data editing, a single reported expenditure may be split into multiple processed expenditures if it was allocated from a single combined expense to multiple individual expenses. Calculating expenditure edit rates based on processed expenditures leads to double counting of edited expenditures since it treats any allocated record as multiple edits rather than multiple instances of a single edited expenditure. Overall, processed expenditures lead to misleadingly higher allocation rates. Additionally, reported quarterly or annual amounts can be split into multiple monthly processed records. This has the effect of counting both unedited and edited expenditures multiple times. Using reported expenditures answers how much of the collected data were edited. We provide expenditure edit rates based on reported expenditure records because we believe that this more closely matches what users have in mind when they ask "how much of the data are edited."

Expenditure edit flags: CE Interview Survey

The expenditure edit rates presented in the Data Quality Profile were calculated using reported expenditures from the internal data. However, for the convenience of data users, this Reference Guide refers exclusively to data files and variables that are available in the public-use microdata files. CE Interview Survey expenditure edit rates are calculated using a subset of records that are unique by the combination of three variables on the MTBI file: NEWID (the CU identifier), SEQNO (the expenditure sequence number), and EXPNAME (the source variable name). The cost flag variable, COST_, is then used to identify whether an expenditure was edited and what type of edit was made (imputation, allocation, combination, or manual). The flag values that identify the different types of edits (or non-edits) for the CE Interview Survey are shown in table 5.

Users attempting to calculate their own edit rates based on table 5 below should be aware that PUMD flags are less detailed than what is available internally. Thus, PUMD users will not be able to separate manually edited records from imputed or allocated records because the PUMD flags for imputation and allocation include manual edits done during these processes. Because manual updates represent a small fraction of the overall number of edits performed, this should not significantly impair users' ability to calculate edit rates in the PUMD.

Table 5. Flag values CE Interview Survey

| CE Interview Survey flag value (internal/PUMD) | Flag description | Metric edit group |
|---|---|---|
| 0/D | The expenditure was not flagged by Census, and not changed by BLS. Expenditures with this flag may have been reviewed by BLS, but were not changed. | Unedited |
| 1/D | The expenditure was flagged by Census, but was not changed by Census or BLS. | Unedited |
| 2/F | Manually updated by BLS. | Manual edit |
| 3/F | Imputed by BLS. | Imputed |
| 4/E | Allocated by BLS. | Allocated |
| 5/G | Imputed and then allocated by BLS. | Imputed and allocated |
| 6/D | Computed by BLS from respondent provided information. | Unedited |
| 7/F | Computed by BLS from imputed data. | Imputed |
| 8/E | Allocated by BLS and then computed from allocated data. | Allocated |
| 9/G | Imputed by BLS, then allocated, and computed from allocated data. | Imputed and allocated |
| Q/F | Imputed manually by BLS analyst due to there being insufficient source data for automatic imputation. | Manual edit |
| R/E | Allocated manually by BLS analyst due to there being insufficient source data for automatic allocation. | Manual edit |
| S/E | Allocated by BLS based on fixed proportions rather than on source data. | Allocated |
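For PUMD users, the calculation described in this section might be sketched as follows. This is an illustration under stated assumptions, not the production procedure: the function name and the row layout (one dict per MTBI record) are hypothetical, keeping the first record seen for each NEWID/SEQNO/EXPNAME combination is an assumption, and the sample values (including the EXPNAME placeholders) are made up. Because the PUMD flags fold manual edits into the imputation (F) and allocation (E) flags, no "Manual edit" group can be produced here.

```python
from collections import Counter

# PUMD COST_ flag -> metric edit group, following table 5.
# Manual edits cannot be separated out in the PUMD flags.
PUMD_FLAG_GROUP = {
    "D": "Unedited",
    "E": "Allocated",
    "F": "Imputed",
    "G": "Imputed and allocated",
}


def pumd_edit_rates(mtbi_rows):
    """Return percent of unique expenditure records per edit group.

    mtbi_rows: iterable of dicts with the keys NEWID, SEQNO, EXPNAME,
    and COST_ (hypothetical layout for illustration).
    """
    first_flag = {}
    for row in mtbi_rows:
        key = (row["NEWID"], row["SEQNO"], row["EXPNAME"])
        # Assumption: keep the first record seen for each unique key.
        first_flag.setdefault(key, row["COST_"])

    counts = Counter(
        PUMD_FLAG_GROUP.get(flag, "Unknown flag")
        for flag in first_flag.values()
    )
    total = sum(counts.values())
    return {group: 100 * n / total for group, n in counts.items()}


# Made-up rows; "VAR_A" etc. are placeholders, not real EXPNAME values.
rows = [
    {"NEWID": 100, "SEQNO": 1, "EXPNAME": "VAR_A", "COST_": "D"},
    {"NEWID": 100, "SEQNO": 2, "EXPNAME": "VAR_B", "COST_": "E"},
    {"NEWID": 100, "SEQNO": 2, "EXPNAME": "VAR_B", "COST_": "E"},
    {"NEWID": 101, "SEQNO": 1, "EXPNAME": "VAR_A", "COST_": "F"},
    {"NEWID": 101, "SEQNO": 2, "EXPNAME": "VAR_C", "COST_": "D"},
]
edit_rates = pumd_edit_rates(rows)
```

The second and third rows share one key, so they count as a single allocated record rather than two, which is the double counting that the use of unique records is meant to avoid.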

Expenditure edit flags: CE Diary Survey

Users of the public-use microdata will not be able to calculate their own edit rates from the microdata because the CE Diary Survey microdata do not contain all of the necessary variables. We subset the four EXPN files to records that were unique by the combination of two variables on the internal EXPN files: NEWID (the CU identifier) and SEQNO (the sequence number). Then, the allocation number (ALCNO) was used to determine whether a record had been allocated, and the cost flag variable (COST_) was used to determine whether another type of edit was performed. Users of the public-use microdata will be able to determine whether a CE Diary Survey expenditure is the result of allocation using the ALLOC variable in the EXPD file, but they will not be able to distinguish the set of reported expenditures from the set of processed expenditures. For the difference between processed and reported expenditures, see the discussion above. In addition, public-use microdata users are unable to identify the small number of expenditures that had edits other than allocation.

Income data are collected twice in the CE Interview Survey, in the first and fourth waves, and once in the CE Diary Survey, either with the initial placement or with the diary pickup at the end of the second week. The CE performs three types of imputation for income in the CE Interview Survey and CE Diary Survey. The first is "Model-based" imputation: when the respondent indicates an income source but fails to report an amount of income received. The second is "Bracket response" imputation: when the respondent indicates the receipt of an income source and fails to report the exact amount of income but does provide a bracket range estimate of the amount of income received. The third type of income imputation is referred to as "All valid blank conversion": when the respondent reports no receipt of income from any source but the CE imputes receipt from at least one source. Flag indicators for income imputation are described in table 6. Since the need for imputation reflects item nonresponse or insufficient item detail, lower imputation rates are desirable for lowering measurement error. However, imputation based on sound methodology can improve the completeness of the data.

Methods - Income imputation

The CE implemented multiple imputation of income data, starting with the publication of 2004 data. Prior to that, only income data collected from complete income reporters were published. However, even complete income reporters may not have provided information on all sources of income for which they reported receipt. With the collection of bracketed income data starting in 2001, this problem was reduced but not eliminated. One limitation was that bracketed data only provided a range in which income falls, rather than a precise value for that income. In contrast, imputation allows income values to be estimated when they are not reported. In multiple imputation, several estimates are made for the same CU, and the average of these estimates is published. Note that income data from the CE Diary Survey are processed in the same way as in the CE Interview Survey.

Imputation rates for income are calculated based on the internal CE data. Each survey wave is treated independently for the CE Interview Survey, and each weekly diary is treated independently for the CE Diary Survey. This means that if a respondent fails to provide income data, then every wave will have its own imputed values. Imputation rates are calculated using final income before taxes. The income is considered to be imputed if any of its summed components were imputed during processing. For example, if a respondent reports receipt of both a salary and royalties, their income will be considered imputed if either the salary or the royalties were imputed. In addition, if two household members both report having salaries, their income will be considered imputed if either member's salary is imputed. These cases are identified using the imputation indicator flag (FINCBTXI), applicable to both the CE Diary Survey and CE Interview Survey, and available in the PUMD. Any value of the flag not equal to '100' is considered imputed.

Table 6. Income Imputation flag values for CE Interview Survey and CE Diary Survey

| Income imputation flag value | Description | Edit classification |
|---|---|---|
| 100 | No imputation. This would be the case only if none of the variables that are summed to get the summary variables is imputed. | Unedited |
| 2nn | Imputation due to invalid blanks only. This would be the case if there are no bracketed responses, and at least one value is imputed because of invalid blanks. | Model |
| 3nn | Imputation due to brackets only. This would be the case if there are no invalid blanks, and there is at least 1 bracketed response. | Bracket |
| 4nn | Imputation due to invalid blanks and bracketing. | Bracket and model |
| 5nn | Imputation due to conversion of valid blanks to invalid blanks. (Occurs only when initial values for all sources of income for the CU and each member are valid blanks.) | All valid blank conversion (AVB) |

All Valid Blank (AVB) conversion rate

This measure quantifies the instances when all valid nonresponses (i.e., the respondent replied that the CU did not receive income from any source) are converted to invalid nonresponses, which were subsequently imputed during processing. The measure is based on cases whose indicator flag has a value of '500' or above.
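The flag rules in table 6 can be sketched as a small classifier. The function names and the sample flag values are hypothetical. The source states that any value other than '100' counts as imputed and that '500' or above indicates AVB conversion; the label returned for any other value not listed in table 6 is an assumption added for completeness.

```python
from collections import Counter


def classify_income_flag(fincbtxi) -> str:
    """Map a FINCBTXI value to the edit classification of table 6."""
    value = int(fincbtxi)  # accepts flags stored as text or as numbers
    if value == 100:
        return "Unedited"
    if value >= 500:
        return "All valid blank conversion (AVB)"
    if 400 <= value <= 499:
        return "Bracket and model"
    if 300 <= value <= 399:
        return "Bracket"
    if 200 <= value <= 299:
        return "Model"
    # Assumption: values not listed in table 6 still count as imputed.
    return "Imputed (type not listed)"


def income_imputation_rates(flags):
    """Return percent of cases per classification, plus the overall rate."""
    labels = [classify_income_flag(f) for f in flags]
    if not labels:
        raise ValueError("no cases supplied")
    counts = Counter(labels)
    rates = {label: 100 * n / len(labels) for label, n in counts.items()}
    rates["Any imputation"] = 100 * (
        len(labels) - counts["Unedited"]
    ) / len(labels)
    return rates


# Made-up flag values, not CE data.
sample_flags = [100, 100, 201, 305, 410, 500, "100", 100]
income_rates = income_imputation_rates(sample_flags)
```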

The CE Interview Survey began continuously tracking perceived respondent burden in the CE Interview Survey beginning in 2017q2.[3] A caveat to the interpretation of this metric is that the burden question is asked of respondents only in the final wave (wave 4) of the CE Interview Survey, and it likely underestimates survey burden due to survivorship bias.

This metric is based on the question asked of the respondent at the end of the interview. This question, its five response options, and the collapsing of these response options into three categories for the metric tabulation are shown in table 7.

CE Interview Survey respondent burden question: "How burdensome was this survey to you?"

Table 7. Respondent burden mapping

| CE Interview Survey 2017q2 - current response options | Mapping to metric subgroups |
|---|---|
| 1. Not at all burdensome | Not burdensome |
| 2. A little burdensome | Some burden |
| 3. Somewhat burdensome | Some burden |
| 4. Very burdensome | Very burdensome |
| 5. Extremely burdensome | Very burdensome |
| (nonresponse) | Missing |
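The collapsing in table 7 amounts to a small lookup. The sketch below is illustrative only. It assumes responses are stored as the integers 1 through 5, with anything else (for example `None`) treated as nonresponse, and the names are hypothetical.

```python
from collections import Counter

# Table 7: five response options collapsed into three metric subgroups.
BURDEN_MAP = {
    1: "Not burdensome",
    2: "Some burden",
    3: "Some burden",
    4: "Very burdensome",
    5: "Very burdensome",
}


def burden_subgroup(response):
    """Return the metric subgroup; unrecognized values are treated as Missing."""
    return BURDEN_MAP.get(response, "Missing")


def burden_distribution(responses):
    """Unweighted share of wave 4 respondents in each metric subgroup."""
    total = len(responses)
    if total == 0:
        return {}
    counts = Counter(burden_subgroup(r) for r in responses)
    return {label: n / total for label, n in counts.items()}
```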

Respondents to both the CE Interview Survey and the CE Diary Survey are supplied with an information book to assist them during the survey. Both books provide the response options for demographic questions and the bracket response options. The CE Interview Survey information book also provides examples that clarify the kinds of expenditures that each section/item code is intended to collect.

There is evidence that respondents who consult the information book more frequently provide higher quality data (i.e., fewer misclassified expenditures, less item nonresponse, and more accurate income reports; see, for example, Safir and Goldenberg, 2008), as they are more engaged in the interview process. Thus, a higher level of information book use is preferred. At the end of both surveys, the interviewer is asked a question about information book use (as shown below). As with the records use metric, an interviewer's judgement of how often a respondent used the information book is subjective, and it can become even more subjective when the interview is conducted over the phone. The information book use metric is based on mapping the response options to this question as follows:

CE Diary Survey information book use question: "Was the information book used during the interview?"

Table 8. Information book use mapping CE Diary Survey

| CE Diary Survey information book use response options | Mapping to metric subgroups |
|---|---|
| 1. Yes | Yes |
| 2. No | No |
| (nonresponse) | Missing |

CE Interview Survey information book use question: "In the interview, how often did the respondent consult the information book?"

Table 9. Information book use mapping CE Interview Survey

| CE Interview Survey information book use response options | Mapping to metric subgroups |
|---|---|
| 5. Almost always (90% of the time) | Used |
| 6. Most of the time (50% to 89% of the time) | Used |
| 7. Occasionally (10% to 49% of the time) | Used |
| 8. Never or almost never (less than 10% of the time) | Did not use |
| 9. The respondent did not have access to the information book | No information book |
| (nonresponse) | Missing |

The metric tabulation is conditioned by wave; the wave subgroups are wave 1, waves 2 and 3, and wave 4.
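A wave-conditioned tabulation of the table 9 mapping might look like the sketch below. It is a hedged illustration. The input layout (pairs of wave number and response code), the use of unweighted shares, and all names are assumptions, and the response codes follow the numbering shown in table 9.

```python
from collections import Counter, defaultdict

# Table 9: response code -> metric subgroup.
INFO_BOOK_MAP = {
    5: "Used",
    6: "Used",
    7: "Used",
    8: "Did not use",
    9: "No information book",
}

# Wave subgroups used by the metric: wave 1, waves 2 and 3, wave 4.
WAVE_GROUP = {1: "Wave 1", 2: "Waves 2 and 3", 3: "Waves 2 and 3", 4: "Wave 4"}


def info_book_use_by_wave(records):
    """records: iterable of (wave, response_code); code None means nonresponse.

    Returns {wave subgroup: {metric subgroup: unweighted share}}.
    """
    counts = defaultdict(Counter)
    for wave, code in records:
        label = INFO_BOOK_MAP.get(code, "Missing")
        counts[WAVE_GROUP[wave]][label] += 1
    return {
        group: {label: n / sum(c.values()) for label, n in c.items()}
        for group, c in counts.items()
    }
```

The same pattern would apply to any other metric that is conditioned on wave, with only the lookup table changing.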

The length of the interview is often used as an indicator of respondent burden; the more time needed to complete the survey, the more burdensome the survey. This is a concern because respondent burden negatively impacts both response rates and data quality. However, survey response time could also reflect the degree of engagement by the respondent, as an engaged respondent could take longer to complete the survey because they use records or report expenses more thoroughly. Despite this ambiguity in interpreting survey response time as a standalone metric, it is nonetheless useful to track the median survey response time so that the impact of changes in survey features on this dimension of the survey design can be assessed.

The CE Diary Survey contains a personal interview component that collects information about income and demographics. This metric measures the median time to complete the personal interview component of the CE Diary Survey among respondents. This metric is not broken down by Diary Week because the survey time collected in the instrument combines both weeks.

For the CE Interview Survey, the metric tabulation is conditioned on wave; the wave subgroups are wave 1, waves 2 and 3, and wave 4.
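As a rough illustration of the median survey time tabulation for the CE Interview Survey, the sketch below groups interview lengths by wave subgroup and takes the median of each group. The input layout, the unit (minutes), the choice to skip records with no recorded time, and the function name are assumptions made for this example.

```python
from collections import defaultdict
from statistics import median

# Wave subgroups used by the metric: wave 1, waves 2 and 3, wave 4.
WAVE_GROUP = {1: "Wave 1", 2: "Waves 2 and 3", 3: "Waves 2 and 3", 4: "Wave 4"}


def median_time_by_wave(records):
    """records: iterable of (wave, minutes); minutes may be None if not recorded.

    Returns {wave subgroup: median interview length in minutes}.
    """
    times = defaultdict(list)
    for wave, minutes in records:
        if minutes is not None:  # assumption: drop records with no recorded time
            times[WAVE_GROUP[wave]].append(minutes)
    return {group: median(values) for group, values in times.items()}


if __name__ == "__main__":
    sample = [(1, 70), (1, 90), (2, 50), (3, 60), (3, 58), (4, 65)]  # made-up values
    print(median_time_by_wave(sample))
```

The median is used rather than the mean because a few very long interviews would otherwise dominate the summary.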

Survey mode refers to the medium or method of administering the survey to the sample unit. The CE Interview Survey is designed to be an in-person interview, but data collection by phone, or a mix of both modes, is also permitted. This metric is based on the interviewer's report on how the interview was administered and is used to assess the prevalence of each mode. There is evidence that in-person interviews yield superior results (Safir and Goldenberg, 2008), and every year CE agrees with the Census Bureau that no more than a set percentage of interviews will be collected over the phone. CE monitors this metric so that action can be taken if targets are not met.

At the end of the survey, the interviewer is asked a question about the mode used to collect the data. This metric is based on mapping the response options to this question as follows (see table 10):

CE Interview Survey mode question: "How did you collect the data for this (first/second/third/fourth) interview for this household?"

Table 10. Interview Survey mode mapping

| CE Interview Survey mode response options | Mapping to metric subgroup |
|---|---|
| 1. Personal visit for all sections | In-person |
| 2. Personal visit for all sections, but telephone follow up for some questions | In-person |
| 3. Personal visit for more than half the sections, the rest by telephone | Mixed |
| 4. Equally split between personal visit and telephone | Mixed |
| 5. Telephone for more than half the sections, the rest by personal visit | Telephone |
| 6. Telephone for all sections | Telephone |
| (nonresponse) | Missing |

The metric tabulation is conditioned on wave; the wave subgroups are wave 1, waves 2 and 3, and wave 4.

In the CE Diary Survey, the term "mode" relates to two separate aspects of the standard CE Production fielding procedures. The first is based on the primary mode of communication used to reach sampled CUs and collect data related to the case, and the second is based on the form of expenditure diary used for recording expenses over the diary placement period. These two mode considerations in the CE Diary Survey are broken out into two distinct metrics in this report. The presentation of the first metric bears some similarity to CE Interview Survey mode, as the three possible coded outcomes are "In-Person", "Telephone", and "Missing", but the construction is much simpler. The outcome code mapping, shown in table 11 below, is based on the diary week level values of the phase 3 production variable 'TELPV'. The TELPV data are collected by the FR after verifying that the diary case has been coded as complete at pick-up (PICK_UP1 = 201 or PICK_UP2 = 201) and thanking respondents for their time. After pick-up, the FR is instructed in the CAPI instrument to answer the question "How did you collect MOST of the data for this case? (Including follow-ups)", to which the FR can respond "By personal visit" or "By phone".

Table 11. Diary Survey Interview Phase Mode mapping

| CE Diary Survey placement interview mode response options | Mapping to metric subgroup |
|---|---|
| 1. By personal visit | In-person |
| 2. By phone | Telephone |
| (nonresponse) | Missing |

The second diary mode metric is slightly more complex in its estimation and requires more context on its introduction into the CE Data Quality Profile. Until July of 2020, the expenditure collection portion of the Diary Survey consisted of only the paper form diary, but as part of the CE program's redesign, the BLS introduced a new online diary mode.[4] Past internal research analyzing the implementation of an online diary mode in a large-scale feasibility test (Jones et al., 2021) found that Census field representatives rated a greater percentage of online diary cases than paper diary cases as being of "high quality". Conversely, this research also found that online diaries were associated with higher allocation rates across expenditure categories. This new diary mode, and the results mentioned above, prompted the inclusion of a new diary mode metric, which monitors the prevalence of each mode of diary data collection.

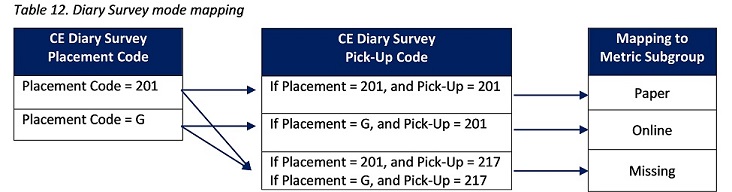

The data used to compute the diary mode metric is at the diary week level, and the metric specifically measures the proportion of total diaries placed with each of the two modes. This metric uses a combination of the codes assigned by Census field representatives during diary placement, and the codes assigned during diary pick-up. In the case of placement codes, the designation "201" indicates the successful placement of a paper diary, and "G" indicates the successful placement of an online diary.

The placement code designation "G" for online diary cases was a temporary measure used to identify online diaries before the official introduction of the online diary mode variable into CE Diary Production in 2022q3. With respect to the pick-up code, the value "201" indicates a complete diary case, and "217" indicates a temporarily absent non-interview, which is ultimately sorted as a missing case.
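One way to picture how the placement and pick-up codes could combine into a diary mode category is sketched below. This is a hypothetical reading, not the production rule. The precedence (a "217" pick-up code is sorted as Missing before the placement code is checked), the inclusion of Missing cases in the denominator, the treatment of codes as strings, and the function names are all assumptions.

```python
from collections import Counter


def diary_mode(placement_code: str, pickup_code: str) -> str:
    """Assign one diary week to 'Paper', 'Online', or 'Missing'."""
    # Assumption: a temporarily absent pick-up (217) is sorted as Missing
    # regardless of which diary form was placed.
    if pickup_code == "217":
        return "Missing"
    if placement_code == "G":    # temporary code for a placed online diary
        return "Online"
    if placement_code == "201":  # successful placement of a paper diary
        return "Paper"
    # Assumption: any other combination is treated as Missing.
    return "Missing"


def diary_mode_shares(cases):
    """cases: iterable of (placement_code, pickup_code) at the diary week level.

    Returns the unweighted share of diary weeks in each category.
    """
    labels = [diary_mode(placement, pickup) for placement, pickup in cases]
    total = len(labels)
    if total == 0:
        return {}
    return {label: n / total for label, n in Counter(labels).items()}
```

For data collected from 2022q3 onward, the official online diary mode variable would replace the check on the temporary "G" placement code.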

[1] Previously, the CE Interview Survey had intermittently collected information on respondent burden for research purposes, but these data are not available to the public.

[2] The Gemini Project was launched to research and develop a redesign of the Consumer Expenditure (CE) surveys, with the intention of reducing measurement error.

[3] CE Diary Survey: Type A code "217 - temporarily absent" is treated as a completed interview by CE. The Diary Survey is designed to collect data for respondents when they are at home, and the Interview Survey is designed to collect data for respondents when they are both at home and away on trips. When everyone is away on a trip in a Diary household during the Diary placement or pickup window, they are counted as completed interviews with $0 of expenditures at home. This includes respondents away at a secondary residence. Since Diary Survey and Interview Survey data are merged or "integrated" during estimation, this practice is designed to capture the right amount of expenditures.

[4] CE does not have an "Unknown eligibility" classification because the Census Bureau trains interviewers to treat any case of unknown eligibility as Type A.